Managing Reviews / Lesson #3

App reviews analysis: How to set up auto-tags and perform semantic analysis

Intro

So far, we’ve learned that your app’s average rating is one of your most important app metrics and that it influences many other factors. We’ve also learned how you can put out a fire when experiencing a spike in negative reviews. It’s now time for us to go deeper into review management strategy - where do you begin, and how can you start automating processes?

Let’s analyze the use case below, and see what tactics can be used even during the initial stages of review management.

The Case

This situation, where Support teams are overwhelmed by a huge number of reviews, is a very common problem nowadays. The good news is that there are a number of solutions - including automating certain common Support team tasks, saving time and money.

Note: this is an extensive topic, so we’ll be splitting this piece into two parts:

- Review analysis and laying the foundation for the automation processes;

- Setting up Auto-replies and best practices for using them.

To kick things off, it’s worth noting that automation is a big undertaking for any business or product. But properly set-up workflows reduce costs, cut the time required to perform certain activities, and make the whole support process more efficient. While people will always be prone to impulsive and illogical decisions, automation strictly follows the rules you’ve set up.

What feedback can be automated: defining the first, second, and third lines of review support

When it comes to automating app store reviews, it’s important to understand that not every review is a good candidate for automation.

Replies to the following kinds of reviews are good candidates for automation:

- Repeated topics and errors

- Short reviews (reviews containing 1-3 words, all emojis, or random letters)

- Thank-you reviews

- Offensive reviews & spam

We call this the First and Second line of review support. In other words, these are the reviews that can be handled by your Support team without involving your technical support or app developer team’s help.
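As a rough illustration of how such reviews could be spotted by their text alone, here is a minimal Python sketch. The function name `automation_category`, the thank-you word list, and the three-word threshold are assumptions made for this example; they are not AppFollow settings.

```python
import re

# Hypothetical word list, for illustration only.
THANK_YOU_WORDS = {"thanks", "thank", "thx"}


def automation_category(text, max_short_words=3):
    """Return a rough automation category for a review, or None.

    Words are runs of letters, so emoji, digits, and punctuation
    do not count as words.
    """
    words = re.findall(r"[^\W\d_]+", text.lower())
    if not words:
        return "no_words"      # emoji-only or symbols-only review
    if any(word in THANK_YOU_WORDS for word in words):
        return "thank_you"
    if len(words) <= max_short_words:
        return "short"         # 1-3 word review
    return None                # longer review: needs a closer look
```

A keyword check like this is deliberately naive: a long complaint that happens to contain "thanks" would also land in the thank-you group, so in practice you would combine it with the star rating before automating a reply.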

The Third line of support deals with difficult bugs and questions that require proper analysis. These are the kind of reviews that are transferred to a company’s internal support team for further resolution. AppFollow supports integration with all major helpdesk systems (Zendesk, HelpShift, Helpscout, and others), so there are two ways you can redirect support tickets:

- Publicly: by responding to the review and providing the support email (Dear user, ... please contact our support team via support@support.com)

- Internally: redirection of the review to the internal support helpdesk system

Depending on the structure of your Support team, either of these methods can be utilized.

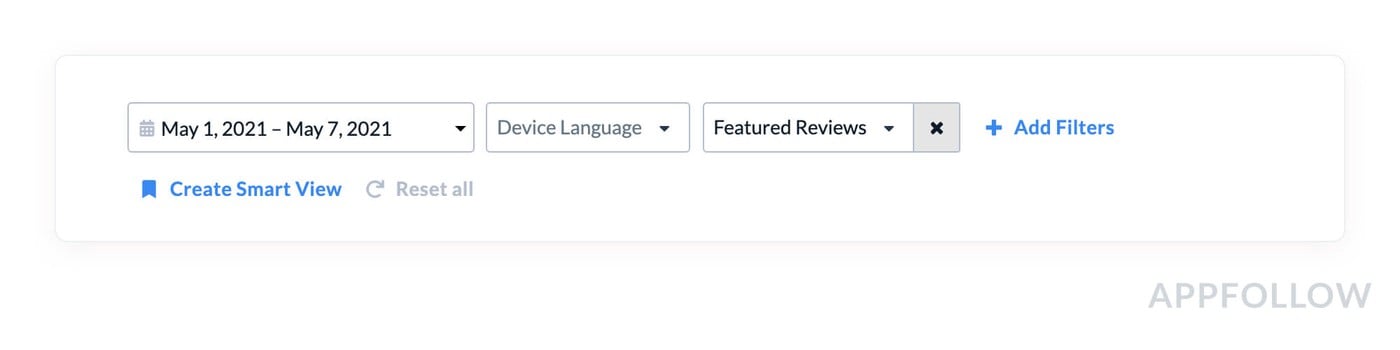

Featured reviews are one category of reviews we strongly advise against automating responses for. Featured reviews are visible on your app page without having to enter the review section itself, and have a big impact on the conversion to install rate. Because potential users are likely to focus heavily on these reviews, you’ll want to respond to these reviews manually and with the utmost consideration. In the App Store, there can be no more than 6 featured reviews, while Google Play can feature 3 to 8 reviews for each app locale.

Both the App Store and Google Play regularly update the list of featured reviews for each country/language. You can track these featured reviews manually, but there’s a risk you might miss them when the list is updated, and switching between stores means tons of manual work. Alternatively, to simplify the tracking process, you can use AppFollow to see every featured review in any given time frame, with the help of the filter below:

Responses left for these reviews, much like the reviews themselves, are in the spotlight. If you are considering a templated response, remember that this is a high-visibility area, and the chance that your visitors will read it is much higher.

Cutting support team routine work: first step - tagging

Now that we’ve learned what kind of reviews should be automated, let’s see how to begin automating processes.

Do note: We’ve structured the process in this article in a logical sequence, but if your team is time-pressed or your internal processes dictate otherwise, the step sequence may vary.

Before you begin automating any given process - review management included - it’s essential you carry out a thorough analysis and cohort segmentation. When it comes to reviews, every product has specific nuances and key topics to keep in mind. For instance, in the gaming vertical, users mostly complain about internal monetization issues (e.g. pay2win or pay for progress). E-commerce products often receive reviews that have little to do with the app itself and focus instead on delivery, shipping, product quality, and more. While every app will have its own topics and segments, the way to segment reviews is the same: you’ll need to perform semantic analysis.

1. Semantic analysis:

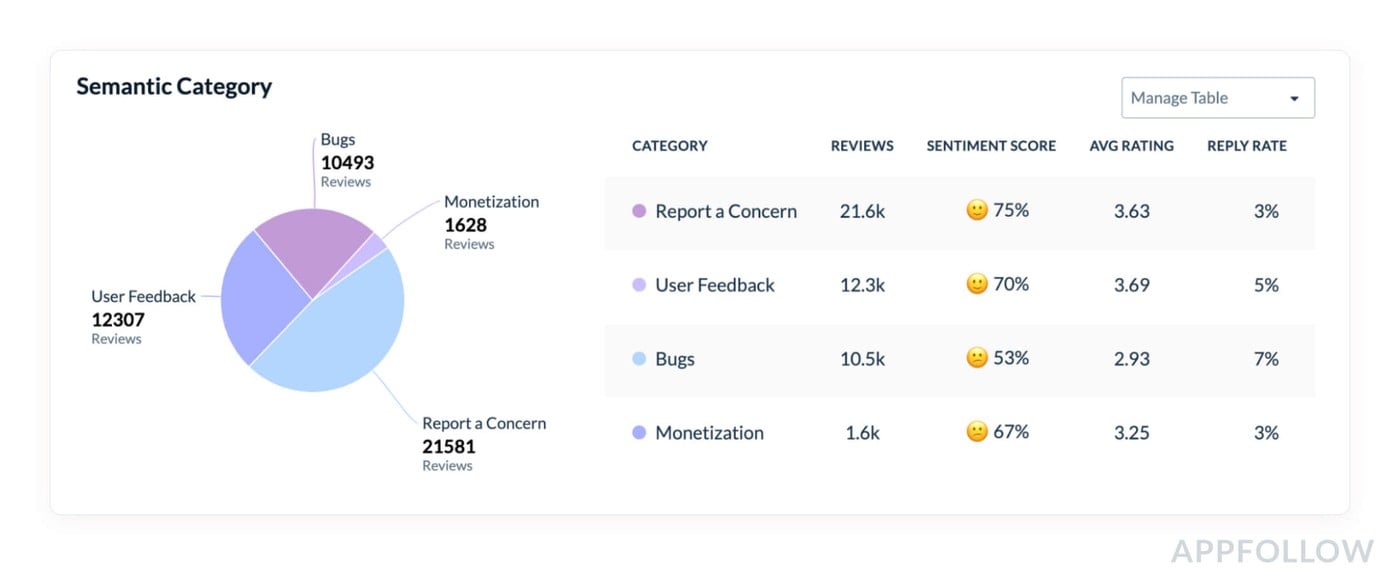

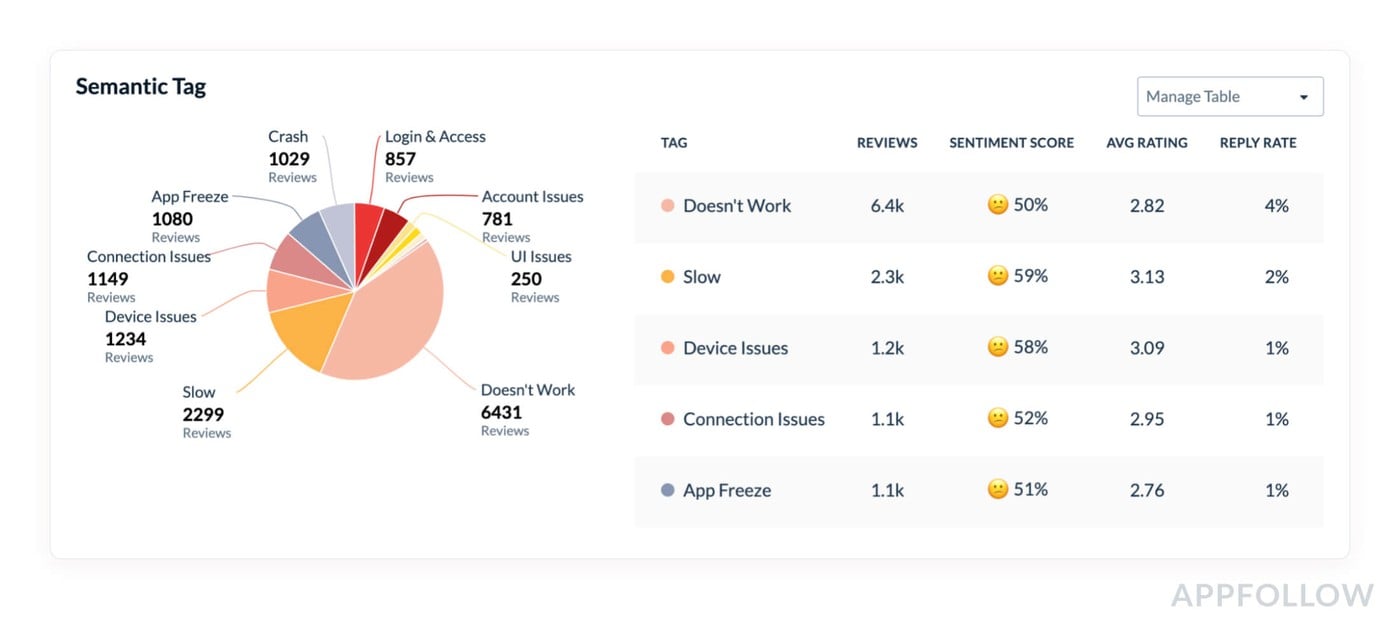

Let’s study a real use case from a popular mobile game. In the screenshot below, you can see the semantic analysis, which was performed automatically based on AppFollow’s machine learning algorithms. Based on keywords, the algorithm highlighted common review topics and aggregated them by theme. The average rating for each topic is one of the metrics that can help prioritize the work or analysis of a given cohort. The lower the average rating, the more important it is that the cohort receives immediate attention.
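The prioritization idea (the lower a topic’s average rating, the sooner it needs attention) can be sketched in a few lines of Python. This is only an illustration, assuming reviews are available as `(topic, rating)` pairs; the function name and the tie-break by review count are assumptions for the example, not a description of AppFollow’s algorithm.

```python
from collections import defaultdict


def topics_by_priority(reviews):
    """Rank topics by average rating, lowest first.

    `reviews` is an iterable of (topic, rating) pairs.
    Returns a list of (topic, average_rating, review_count) tuples.
    Ties on average rating are broken by review count, largest first.
    """
    totals = defaultdict(lambda: [0, 0])  # topic -> [rating sum, count]
    for topic, rating in reviews:
        totals[topic][0] += rating
        totals[topic][1] += 1
    stats = [(topic, s / n, n) for topic, (s, n) in totals.items()]
    return sorted(stats, key=lambda item: (item[1], -item[2]))


# Made-up sample data, for illustration only.
sample = [("Bugs", 1), ("Bugs", 2), ("Payments", 3),
          ("Gameplay", 5), ("Gameplay", 4)]
ranking = topics_by_priority(sample)
```

With this sample, the first entry in `ranking` is the “Bugs” topic, since it has the lowest average rating.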

The semantic analysis below was done without segregating language or country. Straight away, we see that the “Bugs” topic has the lowest average rating. Let’s dive even deeper.

Below, you can see that each segment has more detailed segregation by theme: for instance, the “Bugs” category is divided by problem types - App Freeze, Connection Issues, etc.

Looking at the data provided above, “Doesn’t work” and “App Freeze” segments are more critical compared to the other segments. The number of reviews for the first is also extremely high, so by prioritizing this category, you’ll have a much more tangible impact. It’s likely to also positively affect other metrics, such as Reply Effect and Average Rating (once users possibly update their reviews). That’s why this segment is a good one to start structuring your workflow and automation rules with.

Thanks to the automatic semantic analysis, you can quickly discover the more relevant topics in your review section and properly assess the workload ahead of you. However, semantic analysis is just the first step that will help you navigate large volumes of user feedback. To fine-tune your Auto-replies (more on that later), we need to more thoroughly analyze these reviews. In the previous chapter of our academy, we learned that a single review topic may have more than one angle, and you need to find out more about them in order to create a better, more relevant review response. Let’s take a look at another method that will help you achieve this.

2. Auto-tags

Semantic tags are a quick and easy way to tackle a higher volume of reviews. However, in some cases, you’ll receive specific reviews with topics that aren’t covered by semantics - or where a single topic will contain more than one complaint. You can note and categorize these sorts of reviews with the help of manual tagging.

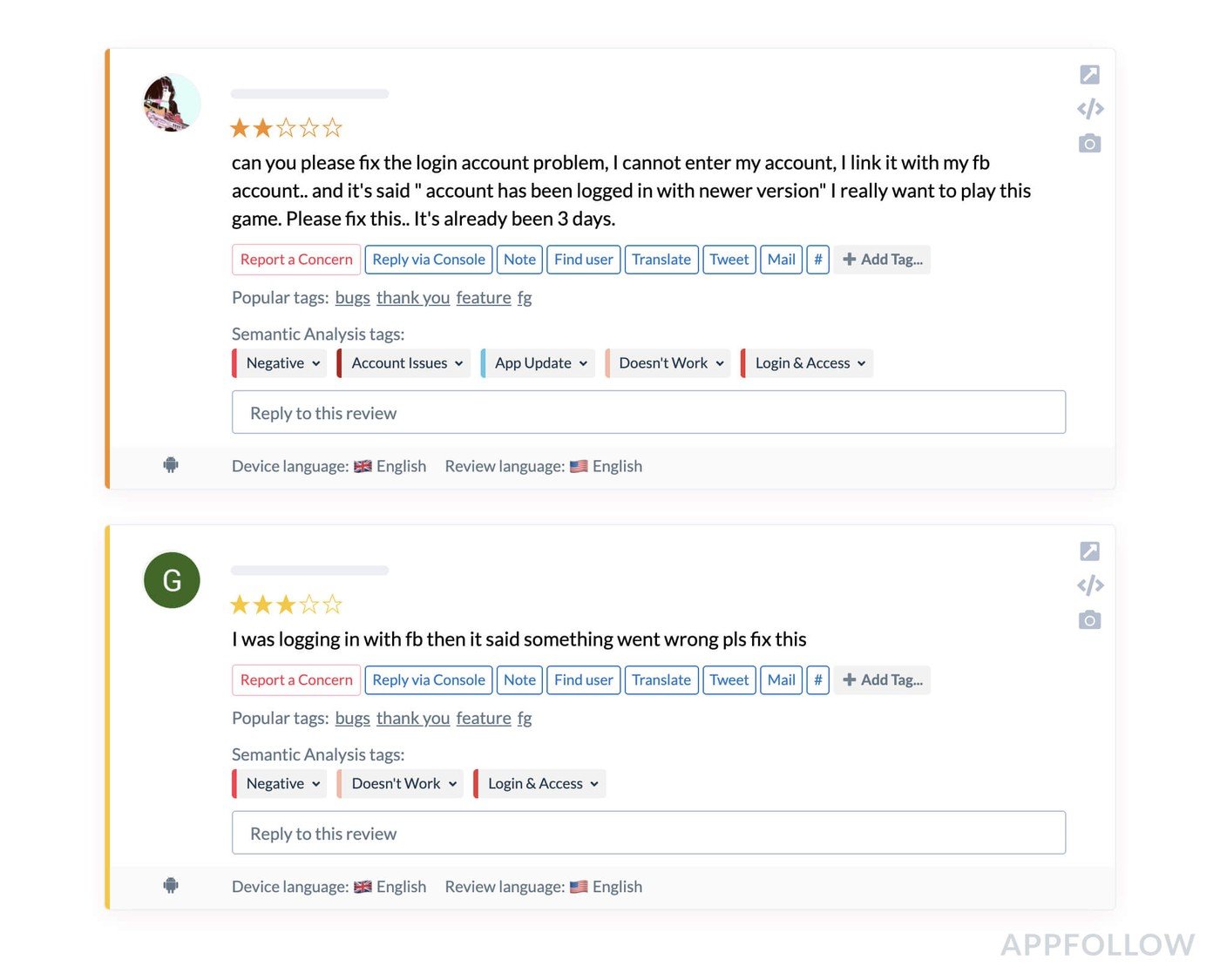

For instance, when analyzing the “Login and Access” review semantic category below, we learned that many users were experiencing difficulties with logging into the app with Facebook, specifically. This bug is known to the developer, and the user needs to take a few steps to resolve it, so when replying to this kind of review, you’ll want to provide brief instructions on how to do that.

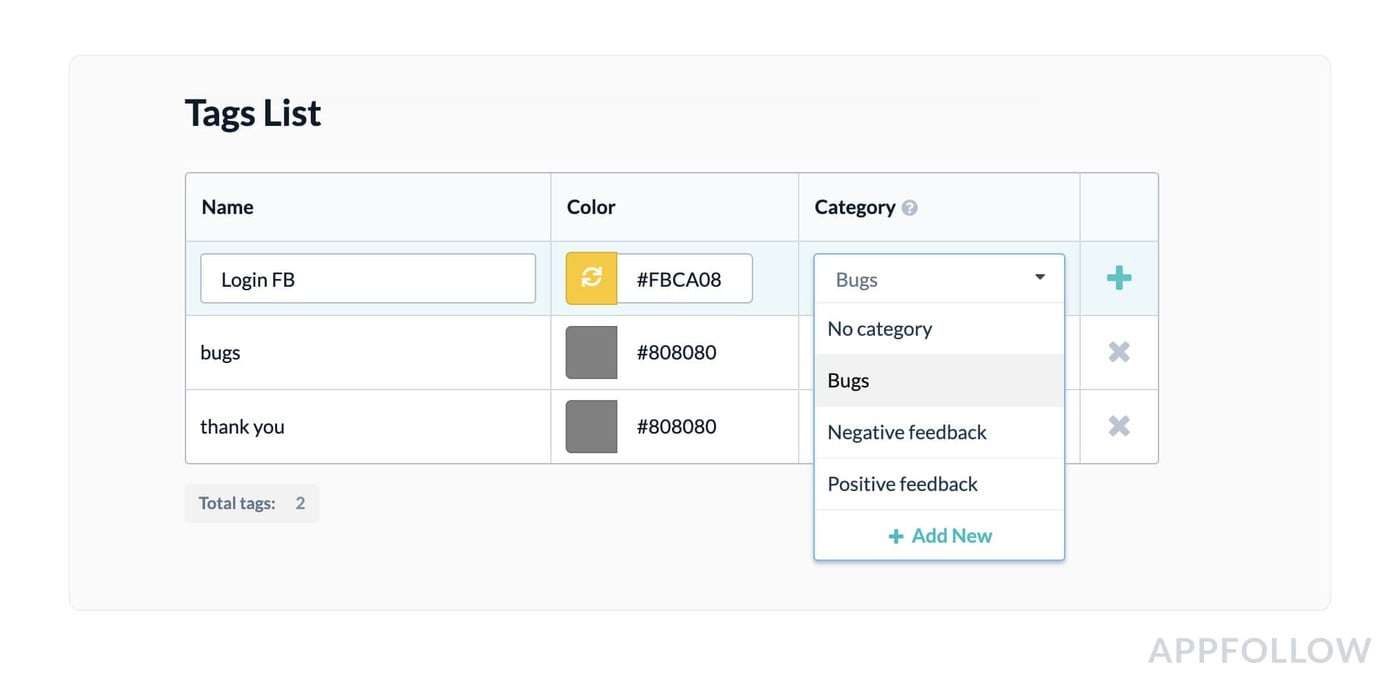

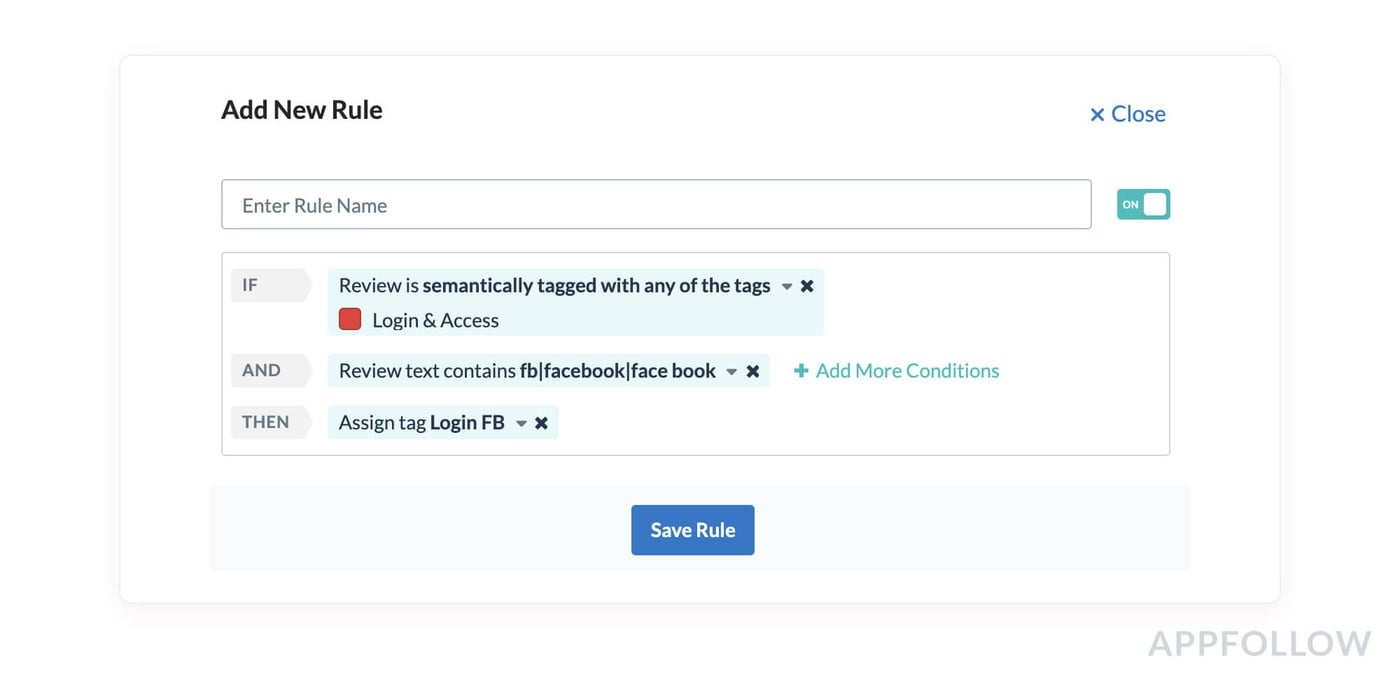

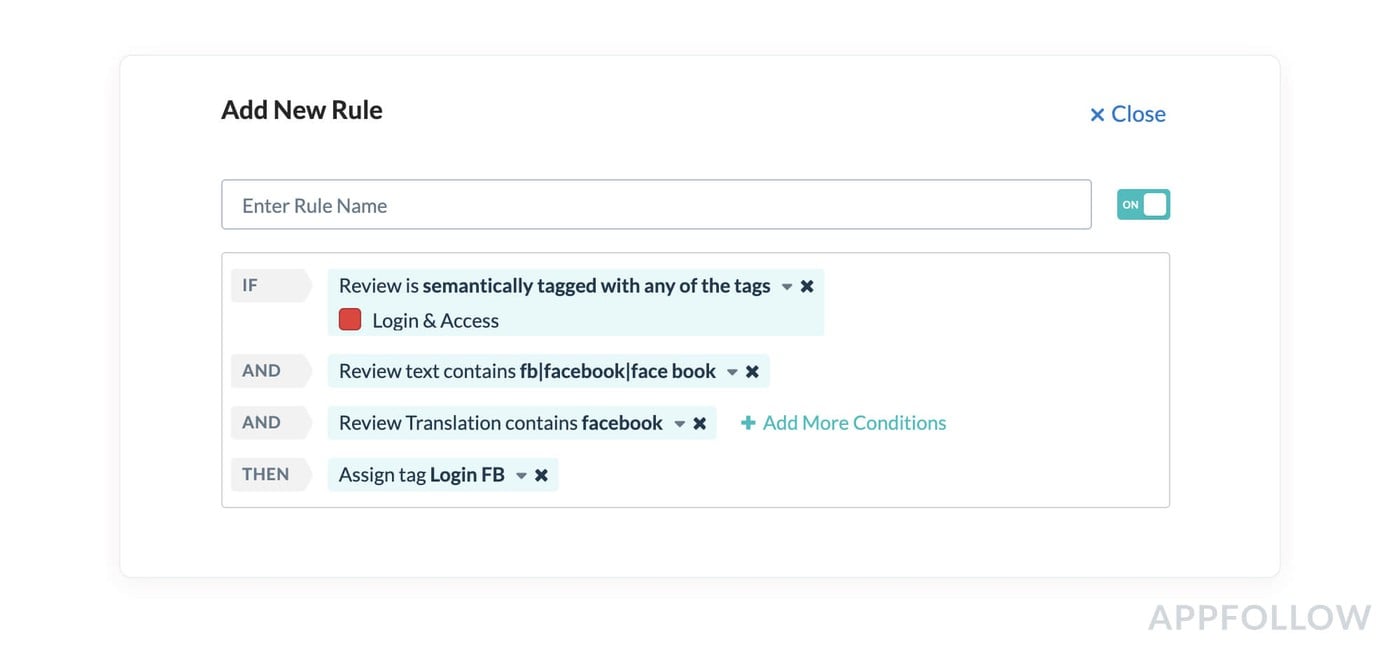

To separate these particular Login issues from other reviews, let’s set up a rule that will gather them under a custom tag. You can see an example of this below.

After creating this tag, you then create a rule: any review that fits the rule’s criteria will be tagged automatically. Based on the same principle, you can analyze and tag just about any review cohort.
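To make the tag-plus-rule idea concrete, here is a minimal Python sketch of a keyword rule scoped to one semantic category. The `Review` and `TagRule` classes, their fields, and the tag name are hypothetical and exist only for this example; the real rule is configured in the AppFollow interface.

```python
from dataclasses import dataclass, field


@dataclass
class Review:
    text: str
    semantic_category: str
    tags: set = field(default_factory=set)


@dataclass(frozen=True)
class TagRule:
    tag: str                # custom tag to apply
    semantic_category: str  # only reviews in this category are checked
    keywords: tuple         # lowercase keywords; any match triggers the tag

    def matches(self, review):
        text = review.text.lower()
        return (
            review.semantic_category == self.semantic_category
            and any(keyword in text for keyword in self.keywords)
        )


def apply_rules(review, rules):
    """Add the tag of every matching rule to the review."""
    for rule in rules:
        if rule.matches(review):
            review.tags.add(rule.tag)
    return review


# Hypothetical rule mirroring the Facebook login example.
facebook_rule = TagRule(
    tag="Facebook login",
    semantic_category="Login and Access",
    keywords=("facebook",),
)
```

Scoping the rule to the semantic category keeps a review that merely mentions Facebook in another context (an ad, for instance) out of the login cohort.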

Do note: the rules in the example are written in English. When it comes to multilingual projects and reviews, you can follow one of two tactics:

1. If your team has decided to reply to all users in English, the rules for tags and Auto-replies can be unified by adding a Translation rule. Every review in any language will be translated into English, and the system will then recognize every English word set up in your rules (here it’s Facebook). This allows you to apply the same tags in all your required languages. You can see what the setup looks like for this below.

2. If your Support team is multilingual, and you use translators to help you translate text to certain languages, you can create these tagging rules for every required language by using local keywords and relevant review topics.
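The second tactic, one keyword set per language, might look like the sketch below. The language codes, the keyword lists, and the function name are assumptions chosen for illustration; your own lists would come from the topics you actually see in each locale.

```python
# Hypothetical per-language keywords for a login-related tag.
LOGIN_KEYWORDS = {
    "en": ("log in", "login", "sign in"),
    "es": ("iniciar sesión", "inicio de sesión"),
    "de": ("anmelden", "einloggen"),
}


def matches_login_rule(text, language):
    """True if the review text contains a login keyword for its language.

    Languages without a keyword list never match, so reviews in
    unsupported languages are left for manual handling.
    """
    keywords = LOGIN_KEYWORDS.get(language, ())
    lowered = text.casefold()
    return any(keyword in lowered for keyword in keywords)
```

With the first tactic, the same check would run against the translated English text, so only the English keyword list would be needed.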

Looking for more tips about managing multilingual reviews? We have more advice in the next chapter of our academy.

Semantic analysis and auto-tagging as a stable source of insights for the product

While setting up auto-tagging and semantic analysis will differ slightly depending on your app’s vertical, it’s a great way to offload manual labour and gain valuable insights from your users. Above, we looked at just one possible way to subdivide a topic by combining semantic and manual tags for more targeted responses. However, auto-tags can also be tied to both the review rating and the length of the review. This is often useful for segmenting positive (5-star) or very negative reviews that are meaningless, i.e., those that don’t contain any constructive feedback. While each project or app will have its own specific feedback nuances, they will all benefit from high-level analysis. This not only simplifies the support team’s work but is also an excellent source of user insights for the wider team (more on this in the following chapters!).
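A rule tied to rating and review length could be sketched as follows. The tag names, the three-word cutoff, and the choice to treat only 1-star reviews as “very negative” are assumptions for this example.

```python
def rating_length_tag(rating, text, max_words=3):
    """Tag very short reviews by rating; return None for everything else.

    Assumes a 1-5 star rating. Only reviews with at most `max_words`
    whitespace-separated tokens are treated as lacking feedback.
    """
    if len(text.split()) > max_words:
        return None                      # long enough to contain feedback
    if rating == 5:
        return "positive_no_feedback"
    if rating == 1:
        return "negative_no_feedback"
    return None
```

For example, `rating_length_tag(5, "Love it")` returns `"positive_no_feedback"`, while a long 1-star review is left untagged so that a person can read it.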

Now that you’ve gotten to grips with semantic analysis and an auto-tagging tool, you can quickly analyze and segment user reviews. This will help you to monitor the dynamics of user mood and track outbursts of problems, as well as becoming the foundation for building your automated-response strategy.

In the next chapter, we’ll be sharing more on how to build your auto-replies strategy, and how you can improve your metrics while tackling a higher caseload.