Managing Reviews / Lesson #6

How replies affect the average rating

Intro

In most cases, the rating request window generates the majority of reviews that contain no text, only stars. Let’s now turn to the second important cohort of reviews, those with text, and discover how you can influence the Average Rating by Reviews metric.

In both the App Store and Google Play, users can edit their reviews, changing the text, the rating, or both. While the rating request prompt helps generate new reviews, the Average Rating by Reviews metric focuses primarily on existing reviews and their updates.

One of a Support team’s main goals is to resolve users’ questions and, in doing so, encourage them to improve their review rating. The moment a review is updated, no matter how old the original review is, it is counted as new feedback, and both your average rating by text reviews and your overall average rating are updated accordingly.
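Here is a minimal sketch of that recalculation. It assumes the average is a plain mean of each review’s current rating; the stores’ actual weighting isn’t described here. The `ratings` store and the `average` and `update_review` functions are hypothetical names for illustration. The key point is that an edit overwrites the review’s old score instead of adding a new review, so the average moves as soon as the update lands.

```python
# Hypothetical store of current ratings, keyed by review ID.
ratings: dict[str, int] = {"r1": 2, "r2": 5, "r3": 4, "r4": 1}

def average(store: dict[str, int]) -> float:
    """Plain mean of each review's current rating (an assumption, not the stores' formula)."""
    return sum(store.values()) / len(store)

def update_review(store: dict[str, int], review_id: str, new_rating: int) -> float:
    """Apply a user's edit to an existing review and return the recalculated average."""
    if review_id not in store:
        raise KeyError(f"unknown review: {review_id}")
    if not 1 <= new_rating <= 5:
        raise ValueError("ratings must be between 1 and 5 stars")
    store[review_id] = new_rating  # the edit overwrites the old score; no review is added
    return average(store)

print(average(ratings))                  # 3.0
print(update_review(ratings, "r1", 4))   # 3.5
```

The review count stays at four. Only the edited score changes, which is why a single 2-to-4 update lifts this small average by half a star.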

Because every update feeds back into your ratings, it’s critical to build a solid response strategy for your Support team: the effectiveness of the tactic you choose can have a big impact on your Reply Effect metric. We have already learned that the Reply Effect can dramatically change your rating; for example, feedback with a rating of 2 increased to 4 equals a Reply Effect of +2,000. Now imagine a thousand such 2-star reviews. If 30% of those users change their review to a more positive one after your Support team replies, you’ll gain 300 more positive reviews and higher ratings, which also lifts the app’s overall average rating.
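The arithmetic behind that scenario can be sketched as follows. `batch_update` is a hypothetical helper that counts raw star changes. The dashboard figures quoted in this lesson, such as +2,000, use AppFollow’s own scale, which isn’t defined here, so the sketch does not try to reproduce them.

```python
def batch_update(n_reviews: int, update_share: float, old: int, new: int) -> dict:
    """Model a cohort of `old`-star reviews where a share of users edit to `new` stars.

    Hypothetical helper; results are in raw stars, not the dashboard's Reply Effect scale.
    """
    updated = round(n_reviews * update_share)        # users who actually edit their review
    star_gain = updated * (new - old)                 # total raw star change across the cohort
    cohort_avg_after = (old * (n_reviews - updated) + new * updated) / n_reviews
    return {"updated": updated, "star_gain": star_gain, "cohort_avg_after": cohort_avg_after}

print(batch_update(1000, 0.30, old=2, new=4))
# {'updated': 300, 'star_gain': 600, 'cohort_avg_after': 2.6}
```

Within that cohort, 300 users moving from 2 to 4 stars raises the cohort’s average from 2.0 to 2.6. How much the app’s overall average moves depends on how large this cohort is relative to all reviews.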

It's worth noting that the AppFollow dashboard also displays the No Reply Effect metric, which tracks rating updates on text reviews that haven’t received a developer response. These updates also have a direct impact on ratings. Just as the Reply Effect isn’t always positive, the No Reply Effect isn’t always negative. Let’s study the two short cases below.
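This rough sketch shows how the two metrics can diverge by splitting rating updates according to whether a developer reply came first. It assumes both metrics are simple sums of star changes. That is a simplification, not AppFollow’s documented formula. `ReviewUpdate` and `split_effects` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class ReviewUpdate:
    old_rating: int   # stars before the user edited the review
    new_rating: int   # stars after the edit
    replied: bool     # True if the developer responded before the edit

def split_effects(updates: list[ReviewUpdate]) -> tuple[int, int]:
    """Return (reply_effect, no_reply_effect) as raw sums of star changes."""
    reply = sum(u.new_rating - u.old_rating for u in updates if u.replied)
    no_reply = sum(u.new_rating - u.old_rating for u in updates if not u.replied)
    return reply, no_reply

sample = [
    ReviewUpdate(2, 4, replied=True),
    ReviewUpdate(1, 3, replied=True),
    ReviewUpdate(3, 5, replied=False),  # improved with no reply, e.g. after a bug-fix release
    ReviewUpdate(4, 2, replied=False),  # worsened with no reply
]
print(split_effects(sample))  # (4, 0)
```

Here the replied reviews gain 4 stars in total, while the unreplied updates cancel each other out. This is why each metric needs to be read on its own.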

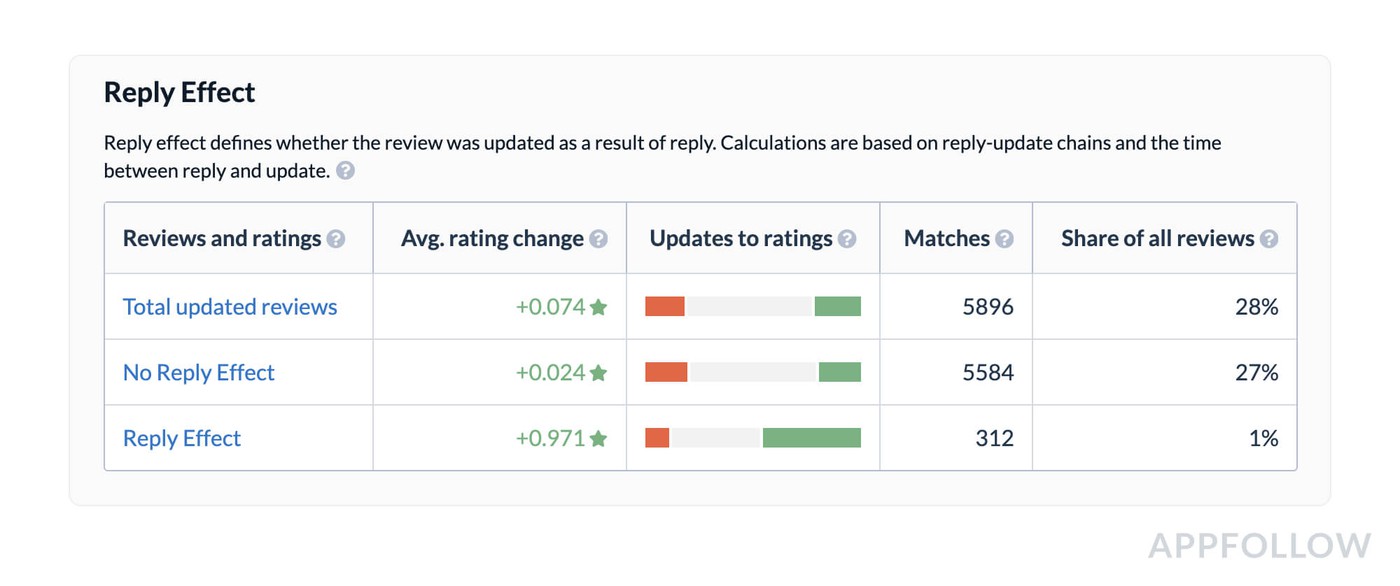

In the first screenshot, we see an overall positive trend in feedback updates regardless of response. Developer responses generally increase the Reply Effect, but more than 50% of users also updated their feedback positively without a developer response.

There may be several reasons for such an increase:

- Efficient work from the third line of your Support team (i.e., technical teams);

- Product updates that solve users’ problems or bugs;

- A loyal audience (although this is generally rare).

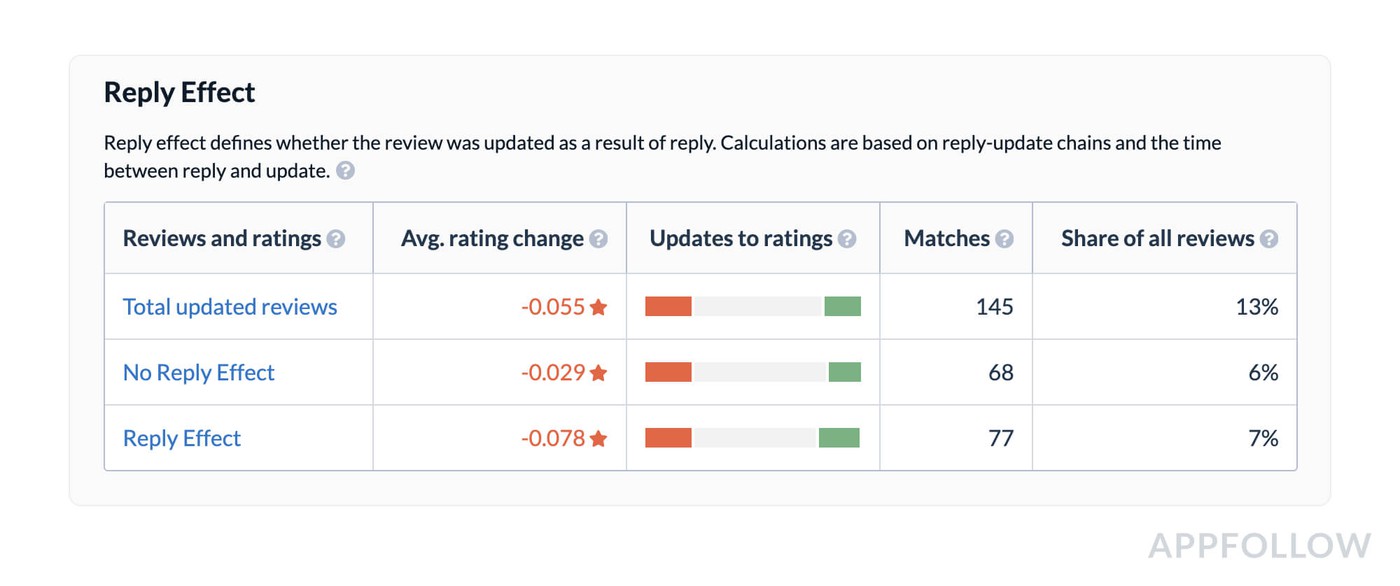

The next screenshot illustrates the opposite situation: overwhelmingly negative review updates. Given the No Reply Effect and the declining average rating change, we can assume that loyal users are having difficulty with the product, haven’t received adequate responses from Support, and have lowered their review scores as a result. The negative Reply Effect in particular shows that the current response strategy isn’t working.

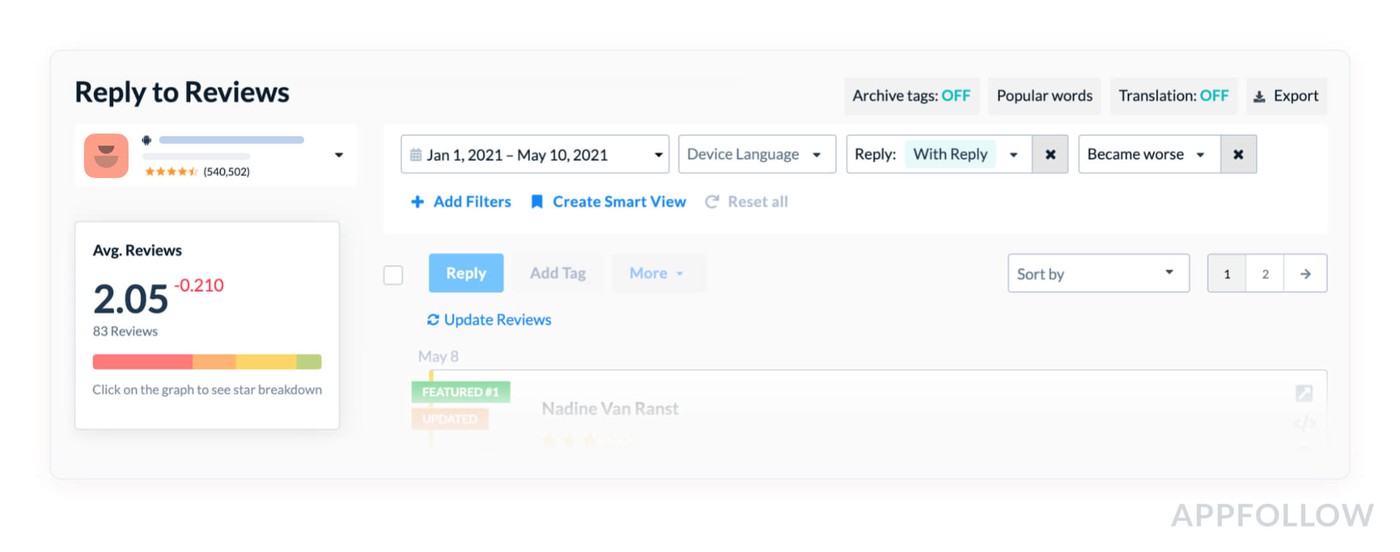

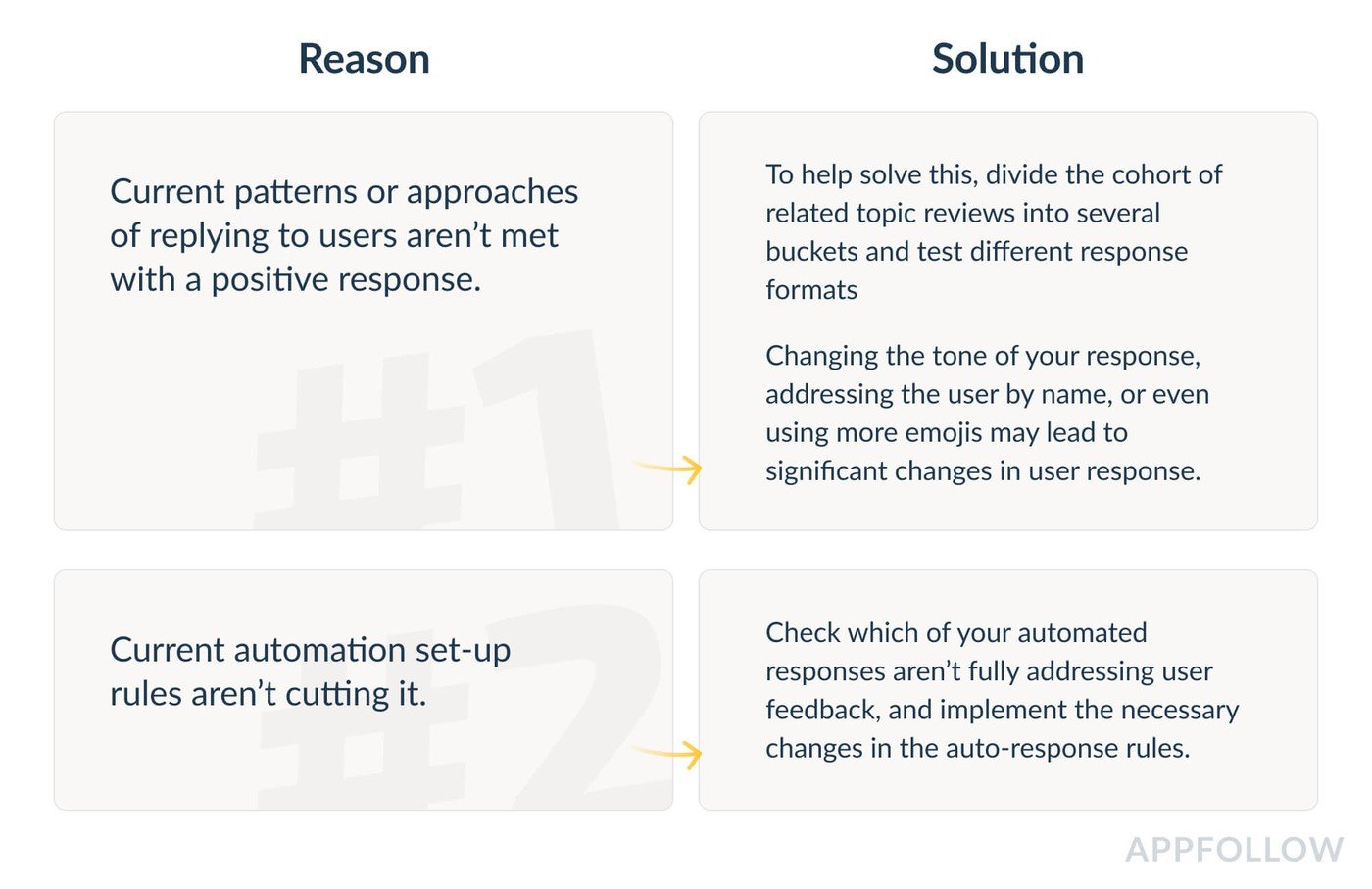

In this case, you need to isolate feedback with a negative post-response dynamic and analyze what could have caused it, as in the image below.
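Outside the dashboard, the same filter can be approximated in a few lines. This sketch assumes you have exported two values for each replied review: the rating at the time of the reply and the current rating. The `RepliedReview` fields and the `negative_after_reply` function are hypothetical. Sorting by the size of the drop puts the most damaging replies first, so you can compare their wording against the original complaint.

```python
from dataclasses import dataclass

@dataclass
class RepliedReview:
    review_id: str
    rating_at_reply: int  # stars when the developer responded
    rating_now: int       # stars after the user's latest edit
    reply_text: str

def negative_after_reply(reviews: list[RepliedReview]) -> list[RepliedReview]:
    """Keep replied reviews whose rating dropped after the response, worst drops first."""
    dropped = [r for r in reviews if r.rating_now < r.rating_at_reply]
    return sorted(dropped, key=lambda r: r.rating_now - r.rating_at_reply)

reviews = [
    RepliedReview("a", 3, 1, "Please email our support team."),
    RepliedReview("b", 2, 4, "This is fixed in the latest update."),
    RepliedReview("c", 4, 3, "Thanks for your feedback!"),
]
print([r.review_id for r in negative_after_reply(reviews)])  # ['a', 'c']
```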

There are only two possibilities:

Given its potential impact, a positive Reply Effect should be another key KPI for your Support team. Finding the best response format, using the right tone of voice with users, and fine-tuning your auto-responses all feed into this metric and should be core responsibilities for the team.

However, AppFollow research shows that replying is not the only factor that affects the Reply Effect metric. Other important aspects to consider are:

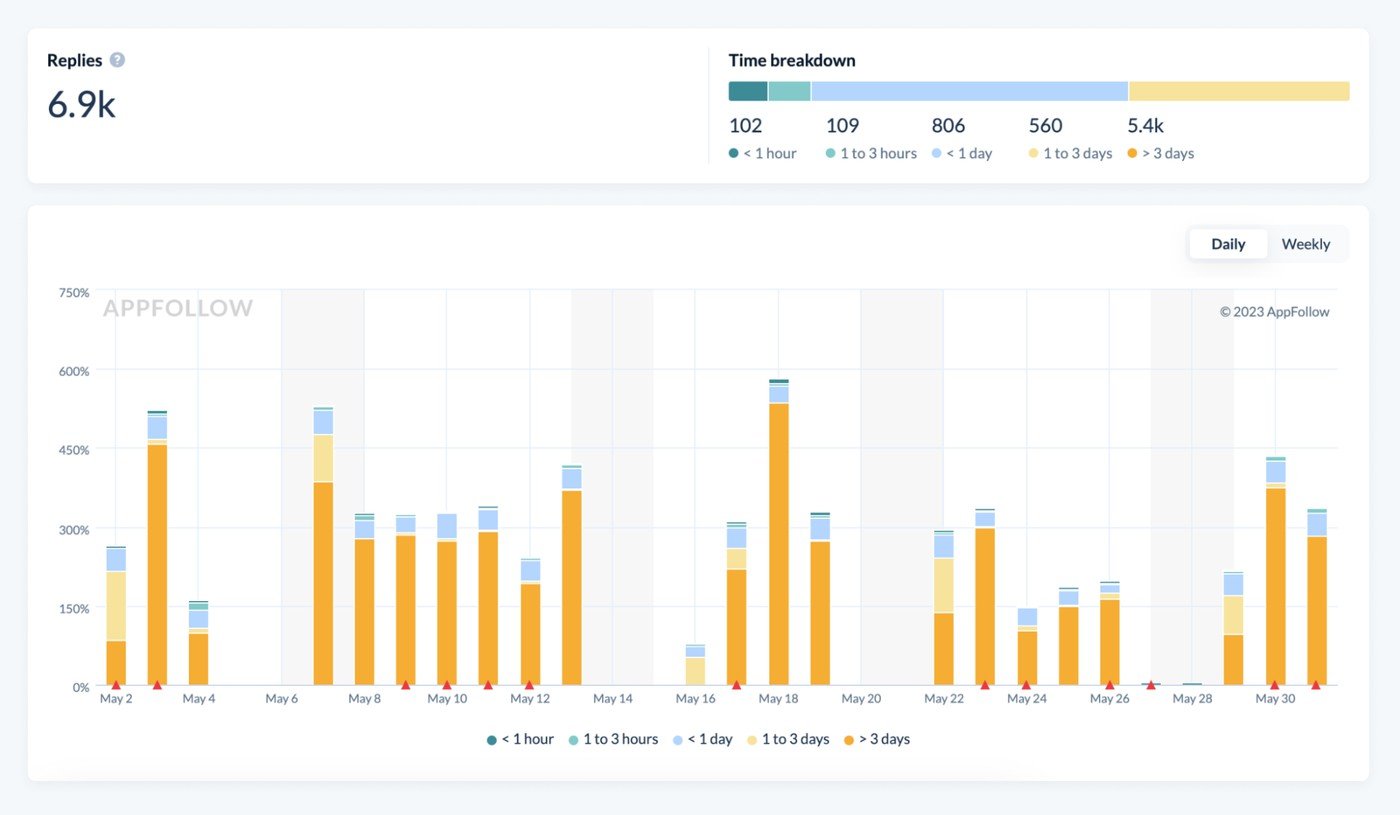

- Response speed

- Response text / template selection accuracy

The Support team’s response speed matters because feedback in the app stores is very different from a standard live support chat, where users can talk to support agents in real time. A user can quickly forget that they’ve left feedback in the app stores, so it’s important to reach them while the topic is still relevant. We recommend responding to feedback on both platforms within 1 to 3 hours. However, the quicker the better: responding within the first hour greatly increases the chance of reaching the user directly and having them update their review.

Important: both the review and the response are published in the app stores with a delay that can last up to a day. In the AppFollow dashboard, our metrics are based on when the response was sent, not on its actual publication date.
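Here is a minimal sketch for tracking the 1 to 3 hour target. It measures delay up to when the reply was sent, in line with how the dashboard counts it. The data layout, the `TARGET` constant, and the helper names are hypothetical. The review timestamp is assumed to be the one the store reports.

```python
from datetime import datetime, timedelta

# Upper end of the 1-3 hour response window recommended above.
TARGET = timedelta(hours=3)

def response_delay(review_posted: datetime, reply_sent: datetime) -> timedelta:
    """Delay up to when the reply was sent, not when the store published it."""
    if reply_sent < review_posted:
        raise ValueError("reply cannot be sent before the review was posted")
    return reply_sent - review_posted

def share_within_target(pairs: list[tuple[datetime, datetime]],
                        target: timedelta = TARGET) -> float:
    """Fraction of (review_posted, reply_sent) pairs answered within the target."""
    if not pairs:
        return 0.0
    hits = sum(response_delay(posted, sent) <= target for posted, sent in pairs)
    return hits / len(pairs)

posted = datetime(2024, 5, 1, 9, 0)
pairs = [
    (posted, posted + timedelta(minutes=40)),           # within the first hour
    (posted, posted + timedelta(hours=2, minutes=30)),  # inside the 3-hour window
    (posted, posted + timedelta(days=1)),               # a full day later
]
print(share_within_target(pairs))  # 0.6666666666666666
```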

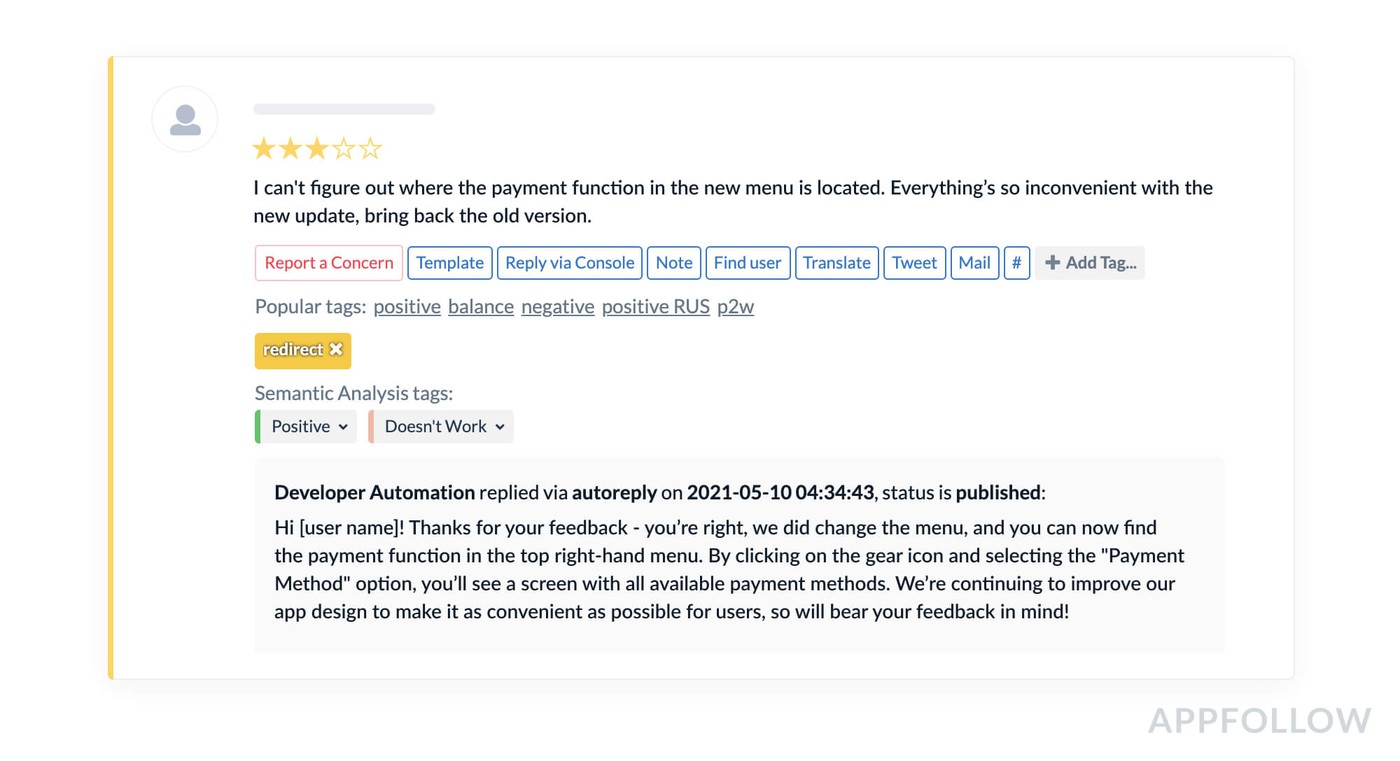

The response template, or the answer itself, is naturally one of the key factors affecting the rating change. Where possible, address the user’s question or problem in the response itself rather than sending them to your help center or asking them to email your support address. As an example, let’s look at a UX-related issue:

By answering quickly and precisely, you’ll be able to positively influence review score changes.

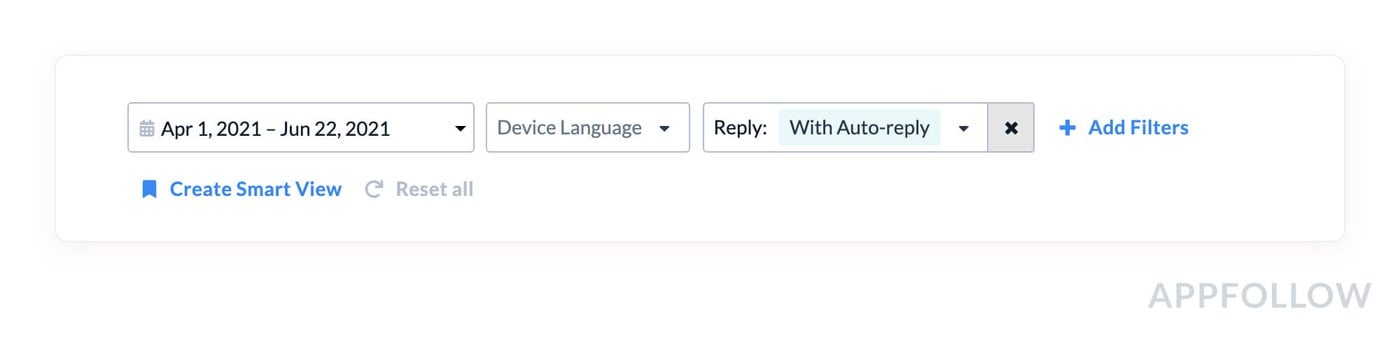

Setting up and triggering accurate auto-answers is another important task for Support teams. If a user receives an automated answer that is irrelevant to their question, they can be left frustrated, and there’s little chance of them improving their rating. That’s why we always recommend checking the auto-response settings after launch to make sure replies match the topics of the reviews they answer. You can check this using the filter shown in the screenshot.
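Suppose you spot-check a sample of auto-replied reviews and label each with its real topic. Per-rule accuracy is then a simple ratio. This is a hypothetical sketch, not the AppFollow filter. The rule names `login_issue` and `payment` and the hand-labelled topics are illustrative assumptions.

```python
from collections import defaultdict

# Each tuple: (topic of the auto-reply rule that fired, topic assigned on manual spot-check)
fired = [
    ("login_issue", "login_issue"),
    ("login_issue", "payment"),
    ("payment", "payment"),
    ("payment", "payment"),
]

def rule_accuracy(pairs: list[tuple[str, str]]) -> dict[str, float]:
    """Share of auto-replies per rule whose topic matched the review's actual topic."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for rule_topic, actual_topic in pairs:
        totals[rule_topic] += 1
        hits[rule_topic] += rule_topic == actual_topic  # True counts as 1
    return {rule: hits[rule] / totals[rule] for rule in totals}

print(rule_accuracy(fired))  # {'login_issue': 0.5, 'payment': 1.0}
```

Rules with low accuracy, like `login_issue` here, are the first candidates for tighter trigger conditions.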

You should now have a firm understanding of the different elements that affect the Reply Effect metric. The Reply Effect contributes to the ever-changing Average Rating by Reviews, which in turn drives the Total Average Rating metric you ultimately want to improve. Your KPIs will change depending on review volume, Support team structure, and product features. Breaking down the work that contributes to your Total Average Rating lets you redistribute your Support team’s workload and focus on what’s important at any given moment.

Now that you know what KPIs to track as a team, let’s look into how you can evaluate every team member’s performance - and make sure you’re challenging and supporting them in equal measure.

Evaluating Agent Performance

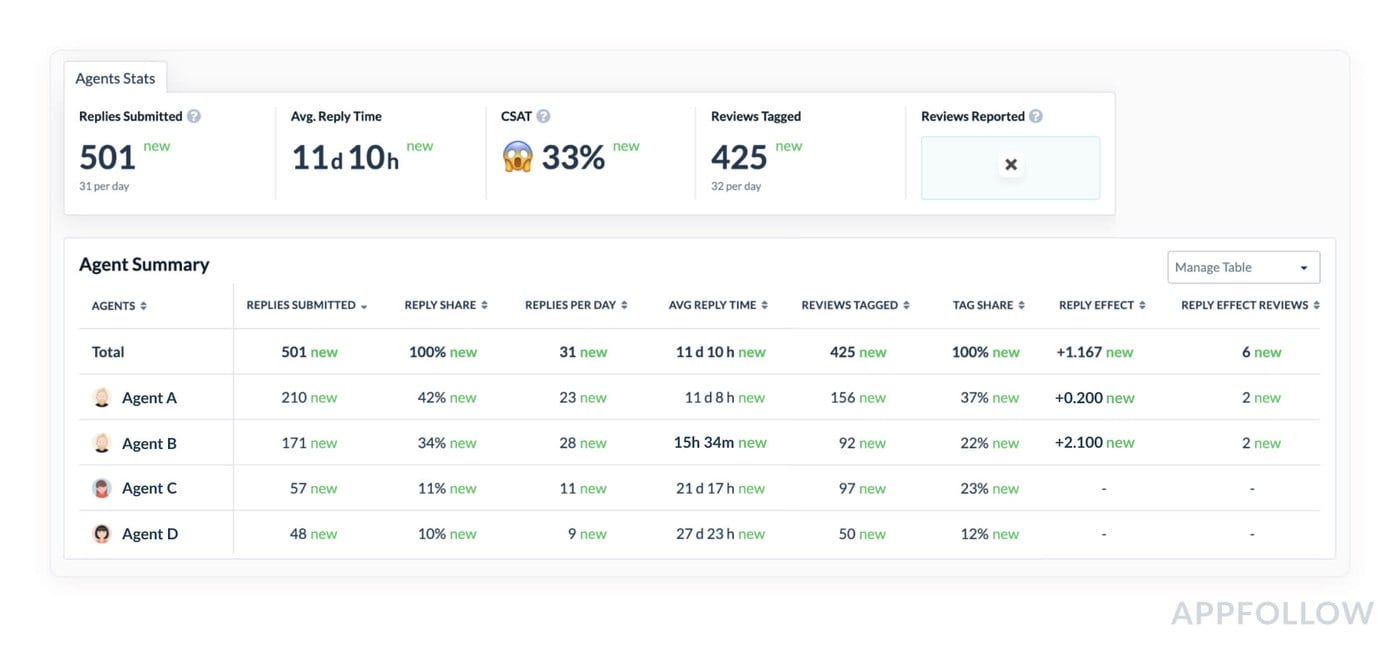

If your Support team consists of a number of agents or managers, breaking down overall results per employee will help you identify growth areas. Let's look at the example below.

Game Application X’s Support team consists of four agents. The data below, gathered with AppFollow’s Agent Performance tool, shows how effectively each agent is working and who needs a boost to improve their performance.

Agent A sent the most responses (210), almost half of the entire team’s output (42%). However, his average reply time is significantly longer than Agent B’s (by 11 days). This could explain the 1,900-point difference in Reply Effect in favor of Agent B. Agents C and D replied to fewer reviews and took the longest to do so, and their replies show no effect so far. At this stage, Agent B is the most effective on quality, while Agent A leads on quantity.

In this case, response times are longer than expected. This could be because the team has only just started working with reviews and is still working its way through older ones, which drives up the average response time.
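The per-agent breakdown above comes down to grouping replies by agent. As a sanity check on the example, 210 replies at a 42% share implies about 500 team replies in total (210 / 500 = 0.42). The sketch below assumes a hypothetical export with one record per reply (`agent`, `hours_to_reply`, `rating_delta`). It reports Reply Effect as a raw sum of star changes rather than on the dashboard’s scale.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Reply:
    agent: str
    hours_to_reply: float  # time from the review to the reply being sent
    rating_delta: int      # star change after the reply (0 if the user never edited)

def agent_stats(replies: list[Reply]) -> dict[str, dict[str, float]]:
    """Per-agent reply count, share of team replies, average reply time, raw Reply Effect."""
    by_agent: dict[str, list[Reply]] = defaultdict(list)
    for r in replies:
        by_agent[r.agent].append(r)
    total = len(replies)
    return {
        agent: {
            "replies": len(rs),
            "share": len(rs) / total,
            "avg_hours": sum(r.hours_to_reply for r in rs) / len(rs),
            "reply_effect": sum(r.rating_delta for r in rs),
        }
        for agent, rs in by_agent.items()
    }

demo = [Reply("A", 30.0, 0), Reply("A", 50.0, 1), Reply("B", 2.0, 2), Reply("C", 10.0, 0)]
print(agent_stats(demo)["A"])  # {'replies': 2, 'share': 0.5, 'avg_hours': 40.0, 'reply_effect': 1}
```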

Overall, analyzing team performance is key to meeting your team’s KPIs. Knowledge sharing also matters, as it helps your team identify best practices and tactics to avoid.

Now that we’ve come to the end of the Academy, we've learned that:

- An app’s average rating has a significant impact on its overall performance in the app stores, so you should continuously work on improving it,

- Many metrics (Average Rating by Reviews, Reply Effect, etc.) can serve as intermediate indicators and KPIs when dealing with user reviews. These help you focus on what’s critical for your product at each stage of its development,

- Automating review management can significantly reduce your Support team’s workload and increase its efficiency, and as a result, drive down operational costs,

- There are a number of methods and techniques for dealing with critical, negative, featured, multilingual, and positive feedback, and in almost any situation, you can reply in a way that positively impacts your rating and brand image.

Working with user reviews is an integral part of app management - helping you pinpoint issues and areas for improvement, giving you valuable insights into user personas, and, of course, supporting your product roadmap. And with AppFollow, you’ll have the tools to analyze your reviews quickly and efficiently.

We hope you found some takeaways you can apply within your own mobile app. If you’d like to hear more about how AppFollow can improve your review management process, don’t hesitate to reach out to us for a demo.

Wishing you success in your development,

Your AppFollow Team.