Managing Reviews / Lesson #4

The Ultimate Guide to Review Automation: How to Automate Replying to Reviews and Increase Your App Ratings

Intro

In the last article, we analyzed how your Support team can handle a rapidly-growing caseload. We discussed the importance of segmenting feedback, and how it can help you analyze reviews and easily gain insights from your users.

This time, we'll dive into tagging and the semantic division of reviews, which will help you build an auto-reply system suited to your needs. To kick off, let’s look at another common issue faced by developers:

The use case

Before we move on to the practical tips and the setup itself, you need to ask yourself a crucial question - do you need to automate responses to reviews? If you answer “yes” to any of the below, it’s most likely time to start automating replies:

- Is your business growing and scaling fast?

- Are your Support team’s resources stretched thin (by task volume, languages, etc)?

- Do you want to optimize your work (time/cost)?

- Are you aiming to shorten response time and increase Reply Rate?

Some app developers rightfully say that using canned responses can degrade the user experience provided by the support team, thus negatively impacting review rating updates.

Simply put, if a user receives a visibly canned review response, they may react negatively - frustrated that they’ve received a “robot” response instead of a human one. But this only happens if you’re using a substandard approach to automation, and there are plenty of ways to make even the most routine answers look natural. We’ll elaborate on these further in our article.

What review responses should you automate?

First, it’s important to understand that we will be talking about one of the possible options for tailoring the auto-replies process. The approach you take, or your exact workflow, can change depending on the project at hand and the structure of your Support team.

Review categories qualifying for automation should more or less match your tags list. The first and second line of support reviews can often be automated entirely because these types of reviews include repetitive topics and problems that don’t require technical support, offensive/spam reviews, or reviews with a high rating.

The third line of support automation can help to promptly transfer feedback to your Technical Support team. Depending on the helpdesk of your choice (such as ZenDesk, Helpshift, etc.) and with the help of AppFollow’s integration setup, you can mark rules for these types of reviews - so all relevant feedback will be sent directly to the right team to resolve the issue.

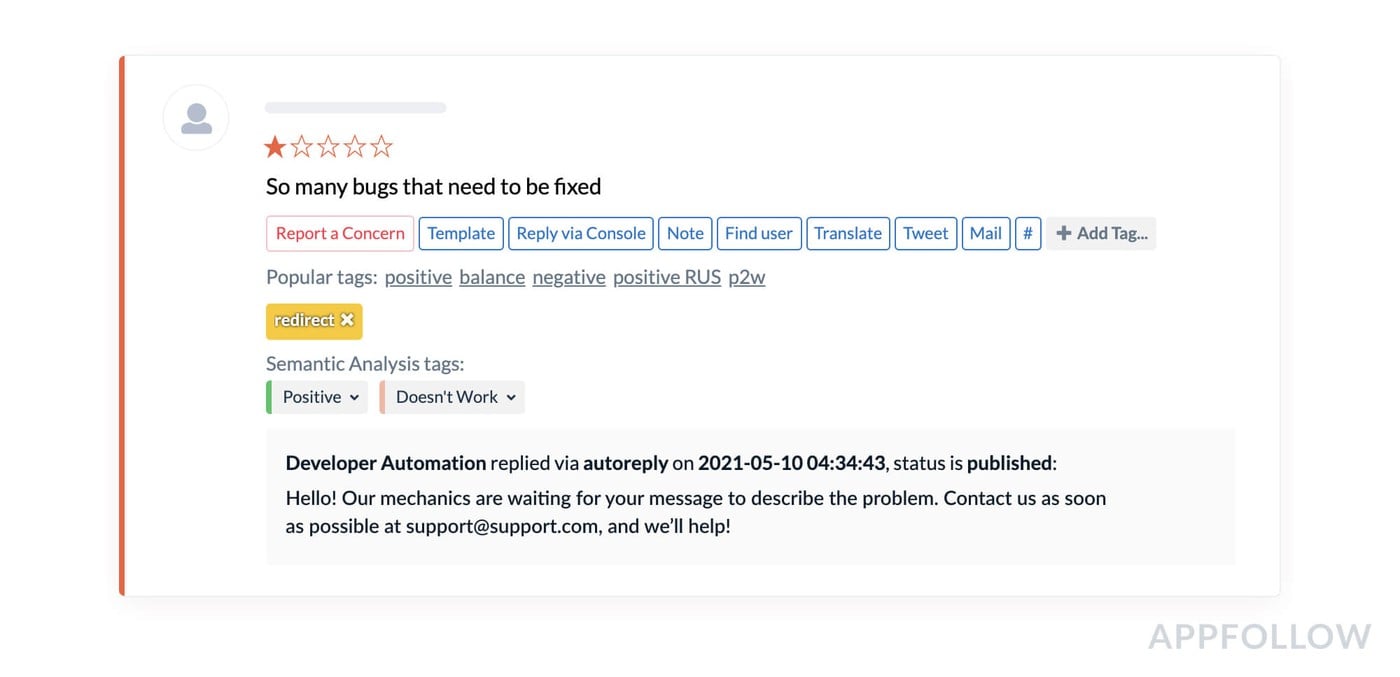
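The split between the lines of support described above can be sketched in a few lines of Python. This is only an illustration of the idea, not AppFollow's rule engine: the `Review` type, the tag names and the `route_review` function are all hypothetical, and you would swap in your own tag list.

```python
from dataclasses import dataclass, field


@dataclass
class Review:
    rating: int                                  # 1-5 stars
    text: str
    tags: set[str] = field(default_factory=set)  # tags assigned earlier


# Hypothetical tag names; replace them with your own tag list.
REPORT_TAGS = {"spam", "offensive"}
TECHNICAL_TAGS = {"bug", "crash", "payment_error"}


def route_review(review: Review) -> str:
    """Return the name of the queue a review should go to."""
    if review.tags & REPORT_TAGS:
        # Offensive/spam reviews are flagged rather than answered.
        return "report_a_concern"
    if review.tags & TECHNICAL_TAGS:
        # Third line: hand the review over to Technical Support.
        return "helpdesk"
    # First and second line: repetitive topics and high ratings.
    return "auto_reply"
```

Checking the report tags first means a review tagged both "spam" and "bug" is flagged instead of being forwarded to the helpdesk; whether that order suits you depends on your own workflow.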

Another commonly automated reply is when you would like to direct users to hotline support, or to an existing community site or help center. This is especially common for e-commerce and gaming apps. Responses to this kind of review can be automated with a single template.

However, how effective this tactic is remains up for debate. More specifically, will users who have navigated away from the app store return to change their review once their problem is solved? There is no guarantee, and there are no detailed statistics on how likely this is to happen.

Each project will have relatively unique statistics, taking into account the problem resolution speed and the quality of further work on the issue. The advantages of this approach are: firstly, you don’t ignore the user's feedback (a customer-oriented approach), and secondly, you increase the likelihood of a change in the user's rating for the better by at least 50%.

Now that we’ve outlined several ways of segmenting reviews, let's move on to some practical advice on how to work with auto-replies.

Review automation best practices

Part 1. Negative reviews

Negative reviews can be frustrating for any product, particularly when the feedback isn’t constructive. Regardless, these reviews shouldn’t be ignored:

- You may find important insights on the stability and quality of your product (see detailed examples in the "Friday Update" article and learn how to work with feedback spikes)

- Working with this review cohort can lead to the highest growth in average rating and associated metrics (more on this in the next articles of the academy)

When it comes to dealing with negative reviews, each vertical - whether gaming, food, children’s apps or banking - will have its own nuances. However, the approach remains similar - and we’ve unified our negative review management tactics to provide practical and valuable advice that any product out there can implement and benefit from.

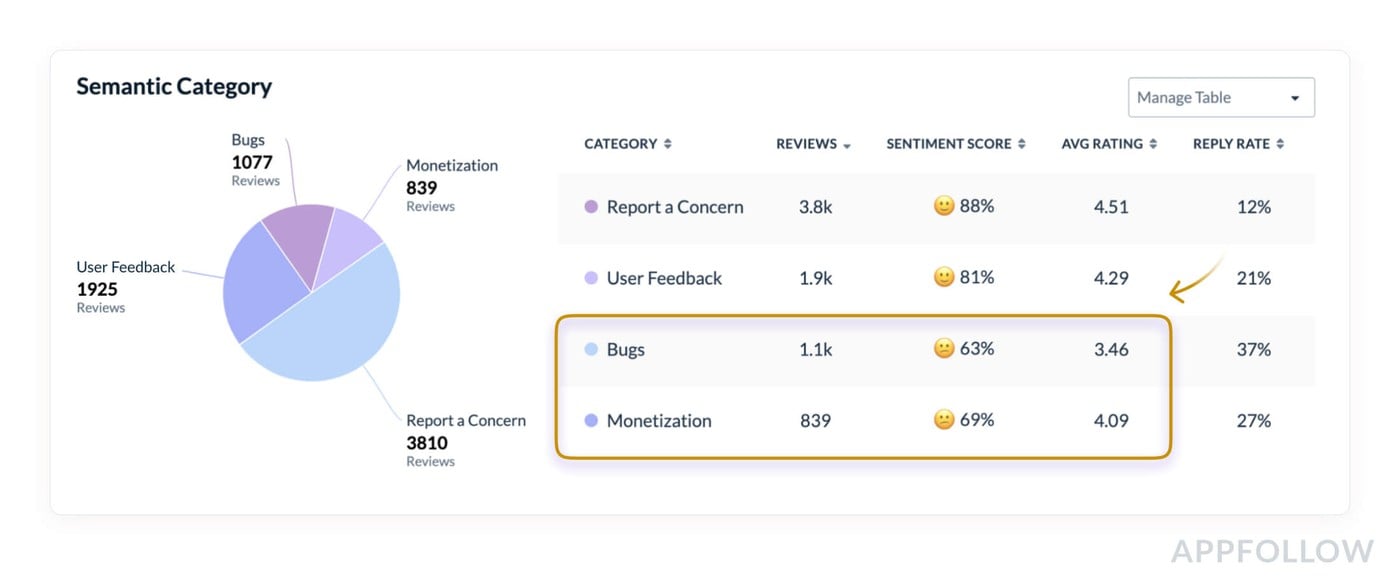

Strategy 1: Making use of semantic analysis and/or the tag list

Negative reviews (or critical reviews) are those rated 1-3 stars. Among the most common types of negative reviews in mobile products are bugs and monetization problems, as highlighted in the example below. The fastest way to see what’s driving poorly-rated reviews in your own app is to use AppFollow’s semantic analysis, which lets you identify topics by average rating and sentiment score.

From there, you can divide these topics into subtopics for more detailed insights (you can find more information on this in our Semantic Analysis part), define your response strategy, prepare reply templates and begin the setup process.

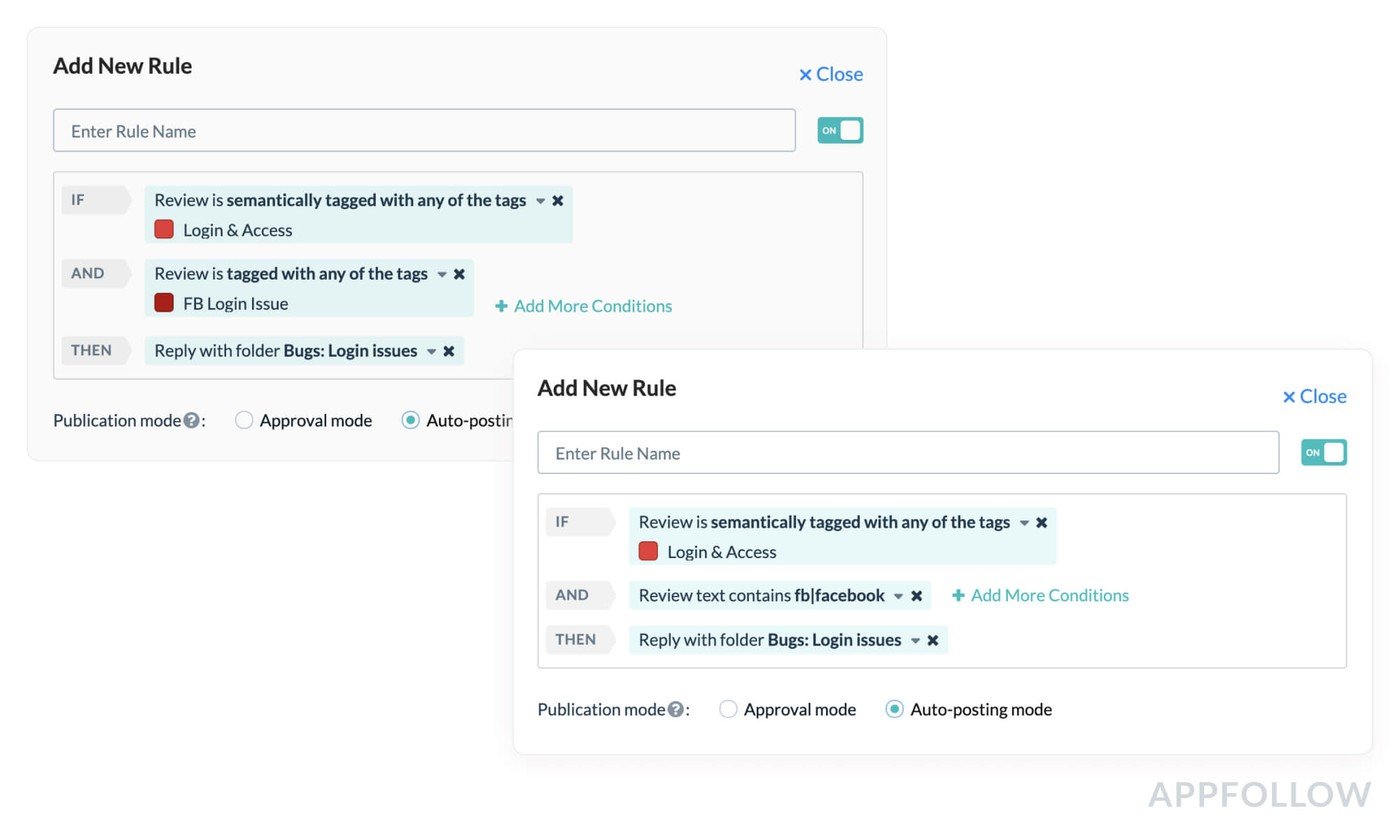

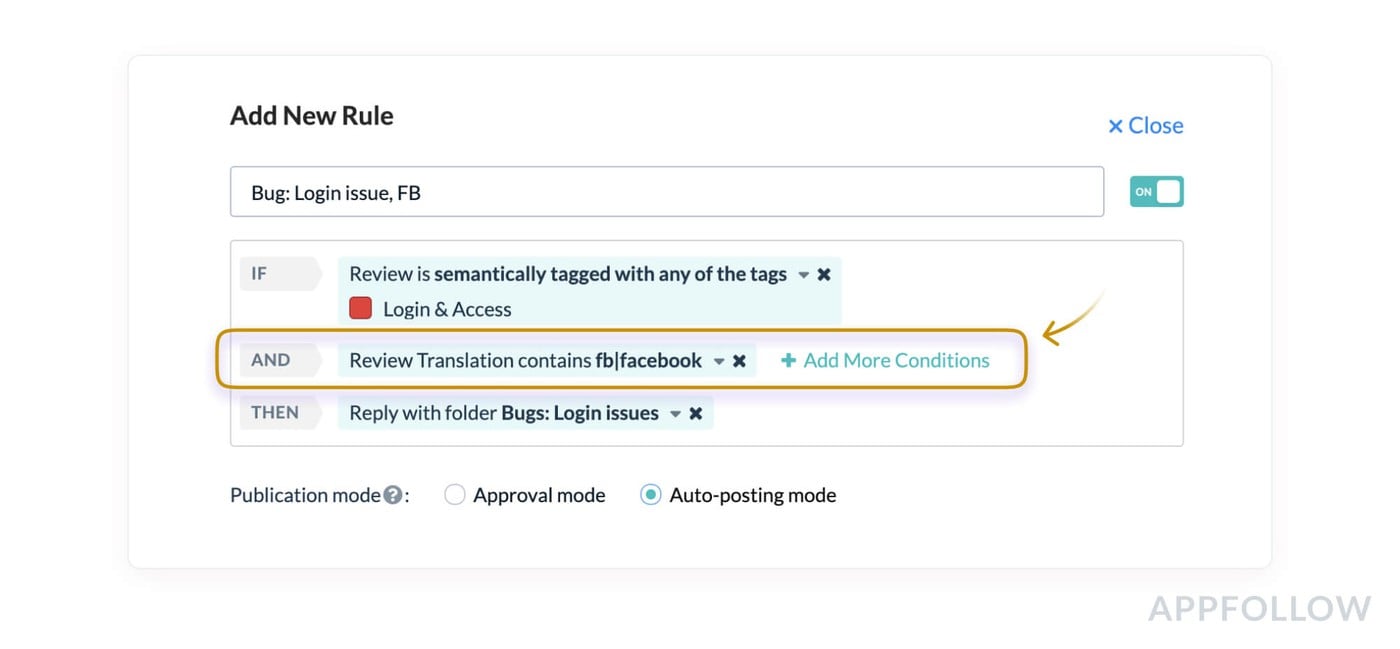

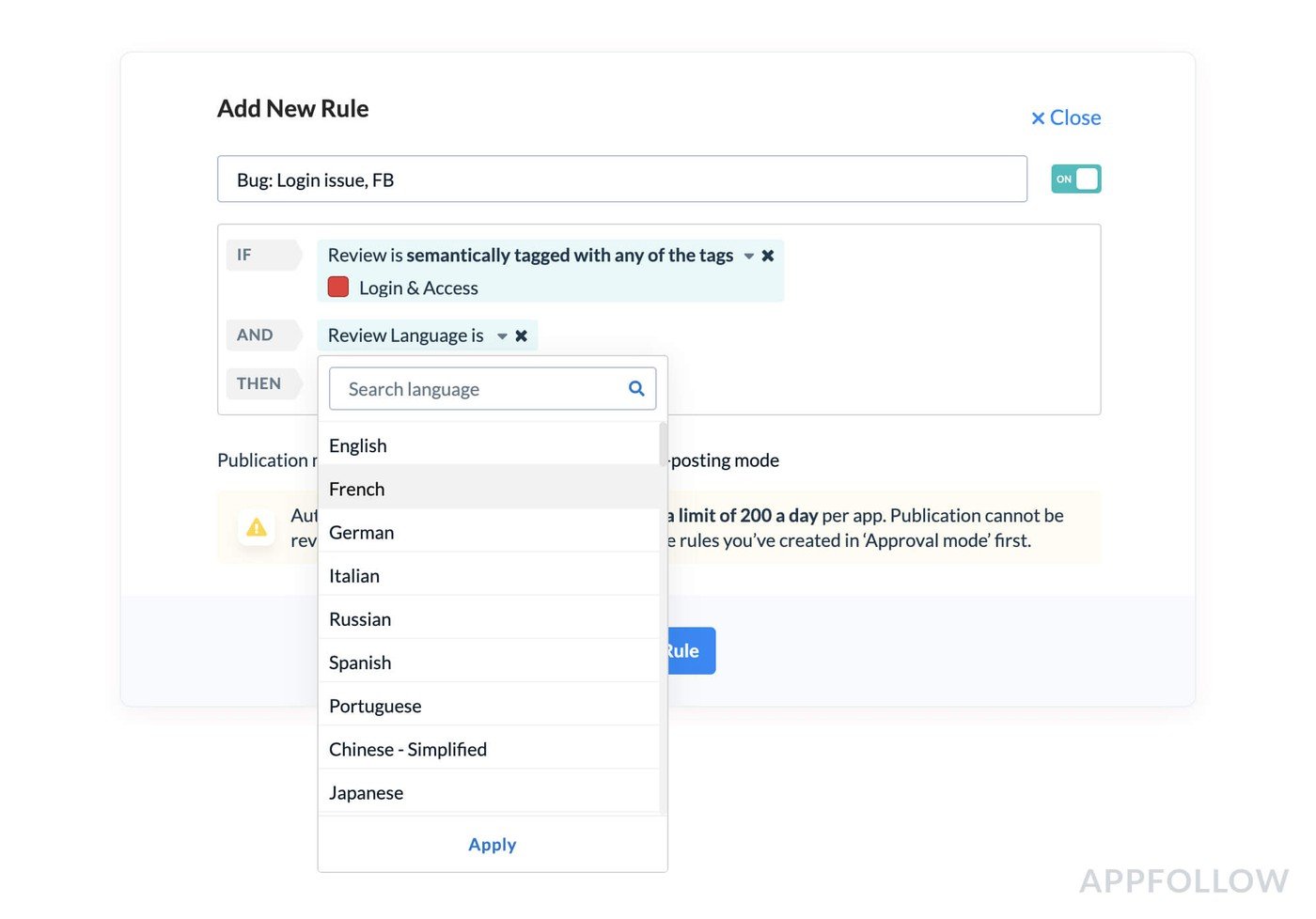
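If you want to reproduce the "topics by average rating" view on your own exported data, a rough sketch might look like this. The sample data, the function names and the 3.0 threshold are assumptions made for illustration; AppFollow's semantic analysis does this for you and also adds a sentiment score, which is not modeled here.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sample data: (topic, star rating) pairs.
reviews = [
    ("login", 1), ("login", 2), ("payments", 1),
    ("design", 5), ("payments", 3), ("design", 4),
]


def average_rating_by_topic(pairs):
    """Group ratings by topic and return {topic: average rating}."""
    ratings = defaultdict(list)
    for topic, rating in pairs:
        ratings[topic].append(rating)
    return {topic: mean(values) for topic, values in ratings.items()}


def worst_topics(pairs, threshold=3.0):
    """Topics whose average rating is at or below the threshold, worst first."""
    averages = average_rating_by_topic(pairs)
    low = [(topic, avg) for topic, avg in averages.items() if avg <= threshold]
    return sorted(low, key=lambda item: item[1])
```

With the sample data, `worst_topics(reviews)` puts "login" ahead of "payments" and leaves "design" out, which is the order in which you would likely prepare reply templates.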

Considering how many subtopics there may be within each issue, we highly recommend making your responses more personalized. You can do this either with custom tagging, as discussed in our previous Academy article (left screenshot, tag: FB Login issue), or additional segmentation by using keywords found in the review (screenshot on the right). In the example below, we’re addressing users specifically with app login issues.

Creating multiple response templates for the same topic is another best practice. Not only will you be varying your responses and avoiding repetition, but you’ll also be able to test and find the most well-received answers.
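As a sketch of how several templates for one topic could be rotated, the snippet below picks a template at random and returns its index alongside the reply, so you can later compare which wording performs best. The template texts and the `pick_reply` helper are hypothetical.

```python
import random

# Hypothetical templates for one topic (login issues).
LOGIN_TEMPLATES = [
    "Hi {name}, sorry about the login trouble. Please try updating the app.",
    "Hello {name}, thanks for flagging the login issue. We are looking into it.",
    "Hi {name}, we hear you. Could you tell us which login method you used?",
]


def pick_reply(templates, name, rng=random):
    """Pick a template at random; return (template index, filled-in reply)."""
    index = rng.randrange(len(templates))
    return index, templates[index].format(name=name)
```

Storing the returned index next to each reply is one simple way to see, after a few weeks, which template leads to the most rating updates.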

Strategy 2: Custom rules

Semantic analysis and tagging are important ways to help you analyze a large amount of user feedback. However, they’re not the only way to create an automated review management system - you can create your own unique response strategy with the help of manual analysis. This is a great approach when you have relatively few incoming reviews, which is usually the case for new and growing projects.

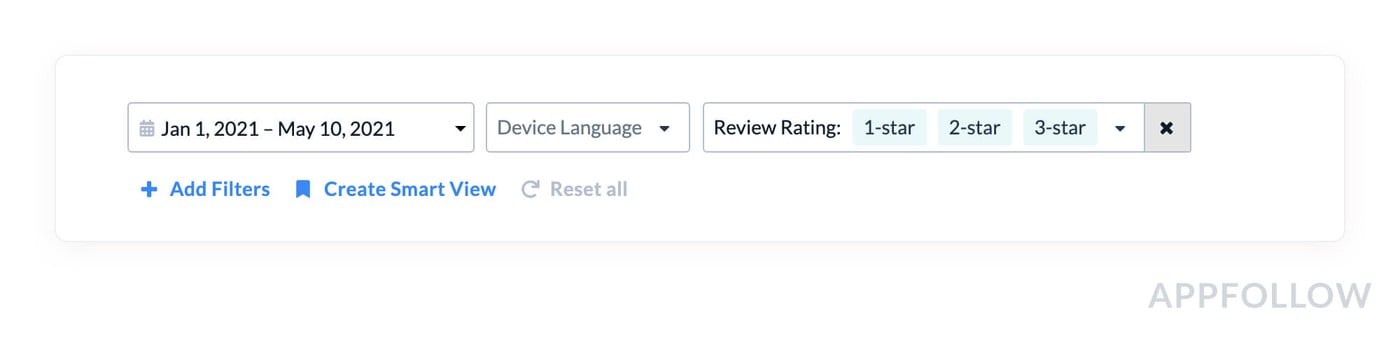

As mentioned above, a positive update of negative (1-3 star) reviews can significantly affect the overall rating - so taking a personalized approach to this cohort is crucial. By selecting a 1-3 star filter in the “Reply to reviews” section, you can manually analyze reviews and identify users’ main topics.

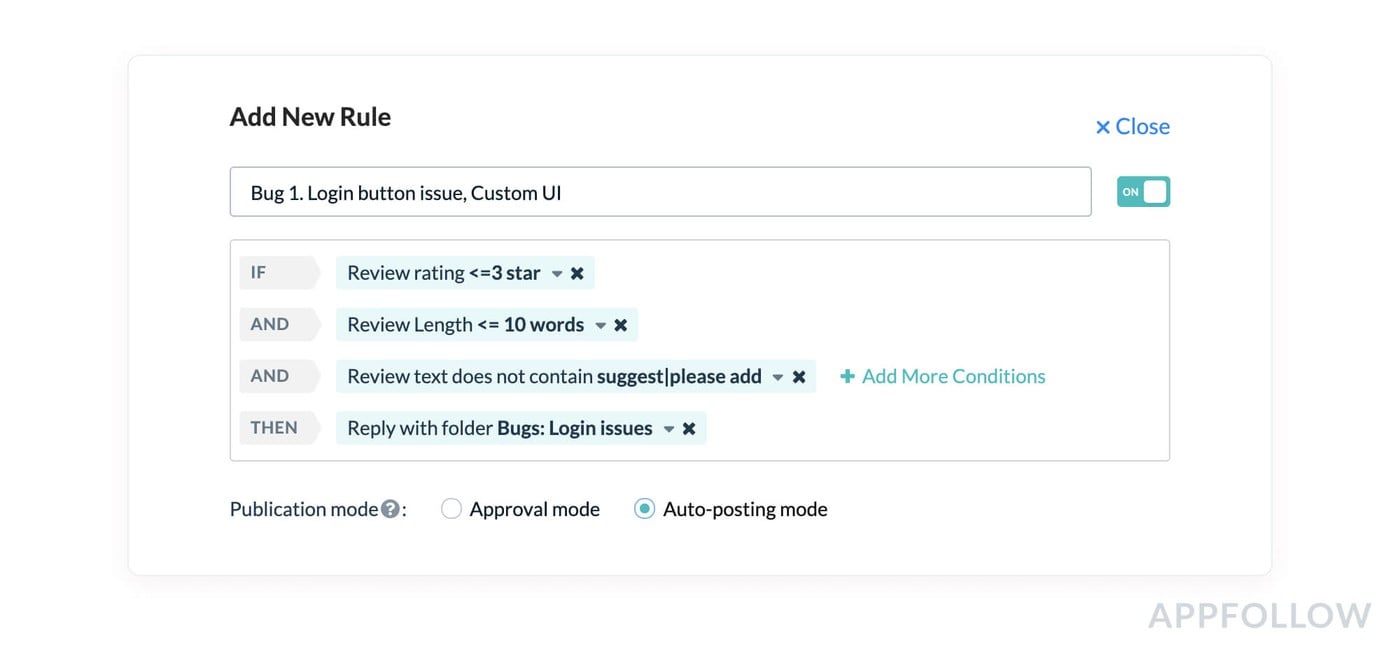

After defining the cohorts, and understanding the specifics of each one, you can add these settings to your rules using various filters and narrowing them down even further.

Don’t forget about mutually exclusive filters; otherwise, you might miss important insights about your product. In the example above, we excluded reviews that contain suggestions (“suggest”, “please add”) and limited the review length to 10 words. Longer reviews can be set aside for additional analysis: the more a user writes, the more likely the review is to contain valuable feedback and insights on using the product.
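The rule from this example can be expressed as a small predicate. This is a sketch only: the function name is hypothetical, and treating the limit as "10 words or fewer" is our reading of the example rather than a documented AppFollow setting.

```python
EXCLUDED_PHRASES = ("suggest", "please add")  # phrases from the example rule
MAX_WORDS = 10                                # word limit from the example rule


def matches_short_negative_rule(rating: int, text: str) -> bool:
    """True if a review qualifies for the short negative auto-reply rule."""
    if not 1 <= rating <= 3:
        return False  # the rule only targets 1-3 star reviews
    lowered = text.lower()
    if any(phrase in lowered for phrase in EXCLUDED_PHRASES):
        return False  # suggestions are left for manual analysis
    return len(text.split()) <= MAX_WORDS
```

Reviews that fail the check are not lost; they simply stay in the manual queue, which is the point of the exclusion filters.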

Using this approach makes your answers more specific. You can also combine and use both strategies together at different stages of product development.

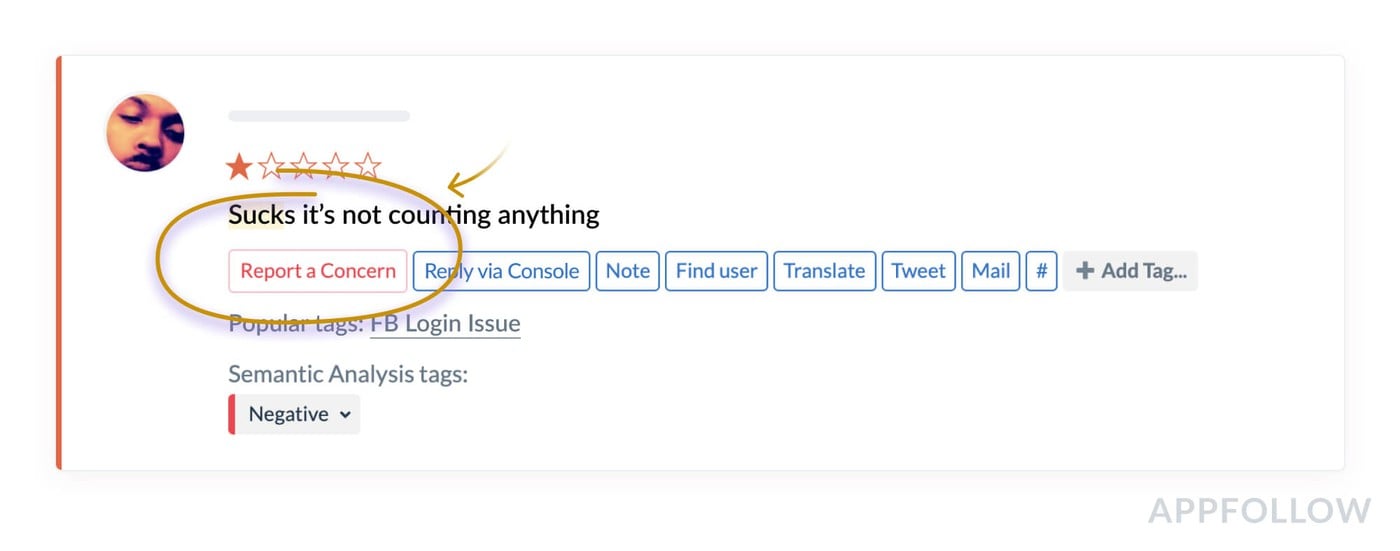

Before finishing up this section on negative reviews, there’s one more category to bear in mind - what we call “bad mood” reviews. These are often short (1-2 words), non-constructive, emotionally charged comments that carry no semantic load whatsoever. In 80% of cases, these reviews may violate store policies - so you can also flag them for removal, as described in the section below.

Part 2. Reviews that fit the “Report a Concern” criteria

According to Apple's official Ratings, Reviews and Responses, and Google Play's General Policy, there are four types of reviews that violate store policies, namely:

- Offensive and aggressive reviews (foul language, threats to the developer, etc)

- Spam reviews/spam attacks

- Reviews that are irrelevant to the product (this is common for e-commerce apps, where users leave negative reviews due to dissatisfaction with delivery or the product. The app itself, however, is not at fault).

- Inappropriate reviews (this is not an official category and partially falls under the third criterion, but we treat it separately. If the review contains just one letter, a single emoji, or just a symbol, we recommend trying to remove it).

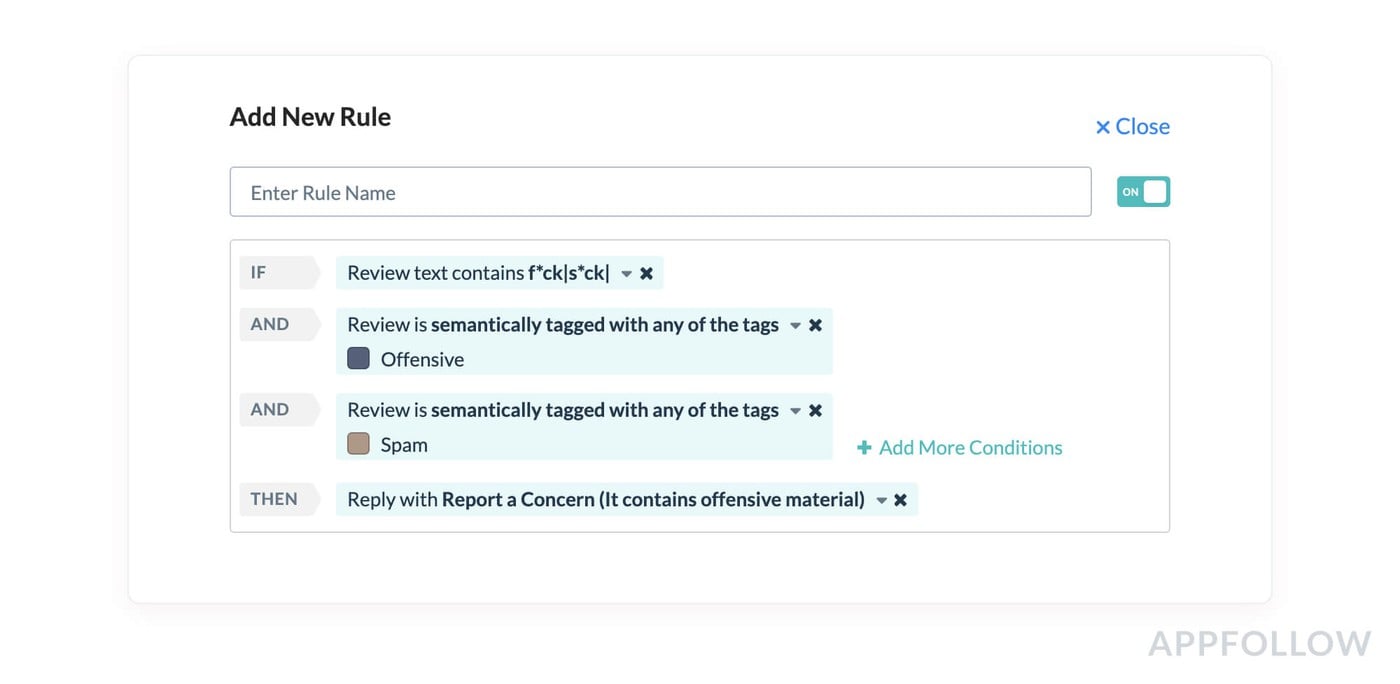
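Two of the four categories above, offensive and inappropriate reviews, are easy to approximate with simple checks. The sketch below is an assumption-heavy illustration: the word list is a placeholder, the function names are hypothetical, and spam or irrelevant reviews are not covered because they usually need more context than a single review text.

```python
# Hypothetical word list; a real one would be longer and language-specific.
OFFENSIVE_WORDS = {"idiot", "scammers"}


def is_content_free(text: str) -> bool:
    """True if the review holds at most one letter or digit.

    This covers a single letter, a lone emoji, or bare symbols.
    """
    stripped = text.strip()
    if not stripped:
        return False  # rating-only reviews have no text to report
    return sum(ch.isalnum() for ch in stripped) <= 1


def report_reason(text: str):
    """Return a reason to flag the review, or None to leave it alone."""
    lowered = text.lower()
    if any(word in lowered for word in OFFENSIVE_WORDS):
        return "offensive"
    if is_content_free(text):
        return "inappropriate"
    return None
```

Plain substring matching will produce false positives on longer word lists, so treat the result as a candidate for review rather than a final verdict.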

The process for reporting these kinds of reviews is simple. Once you have set up the required filter/tag for each cohort, you can use AppFollow’s “Report a concern” function, as seen in the example below.

It’s also possible to set up an automatic rule to flag these reviews for removal. The rule can be based on semantic tags, which AppFollow assigns to reviews with the help of machine learning according to the criteria above, or on key queries found in the review text.

Do note: to increase the likelihood of a positive complaint resolution, AppFollow has a default limit on the number of reports you can submit per day. The remaining requests are queued and sent the next day. A more detailed guide can be found here.
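To make the queuing behavior concrete, here is a minimal sketch of a daily cap with carry-over. The `ReportQueue` class is hypothetical, and the limit is a parameter because the actual default is not stated in this article.

```python
from collections import deque


class ReportQueue:
    """Send at most `daily_limit` reports per day; keep the rest queued."""

    def __init__(self, daily_limit: int):
        self.daily_limit = daily_limit
        self.pending = deque()

    def add(self, review_id: str) -> None:
        """Queue a review for reporting."""
        self.pending.append(review_id)

    def send_today(self) -> list:
        """Return the reports to submit today, oldest first."""
        batch = []
        while self.pending and len(batch) < self.daily_limit:
            batch.append(self.pending.popleft())
        return batch
```

Calling `send_today()` once per day drains the queue in the order the reviews were flagged, so nothing is dropped when a spam attack exceeds the cap.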

Part 3. Reviews with repetitive topics

This is another helpful use case for semantic analysis or customized auto-tags. In the same way as working with negative reviews, you can highlight common themes by using semantics, custom tags, and/or key queries and set up appropriate rules.

This will help you make better use of templates and promptly get back to users who experience the same kind of problem. Remember, the more templates, the better. This will help vary responses and make your replies seem more individual.

Part 4. “Thank you” reviews

Many developers wonder if it’s worth responding to 4- and 5-star reviews if they don't meet the criteria above. According to internal research conducted by AppFollow, positive reviews without a developer response can lose up to two points in rating on average, while reviews with a response lose around 0.6 points. Thanking your users for their good feedback is also key to increasing user loyalty.

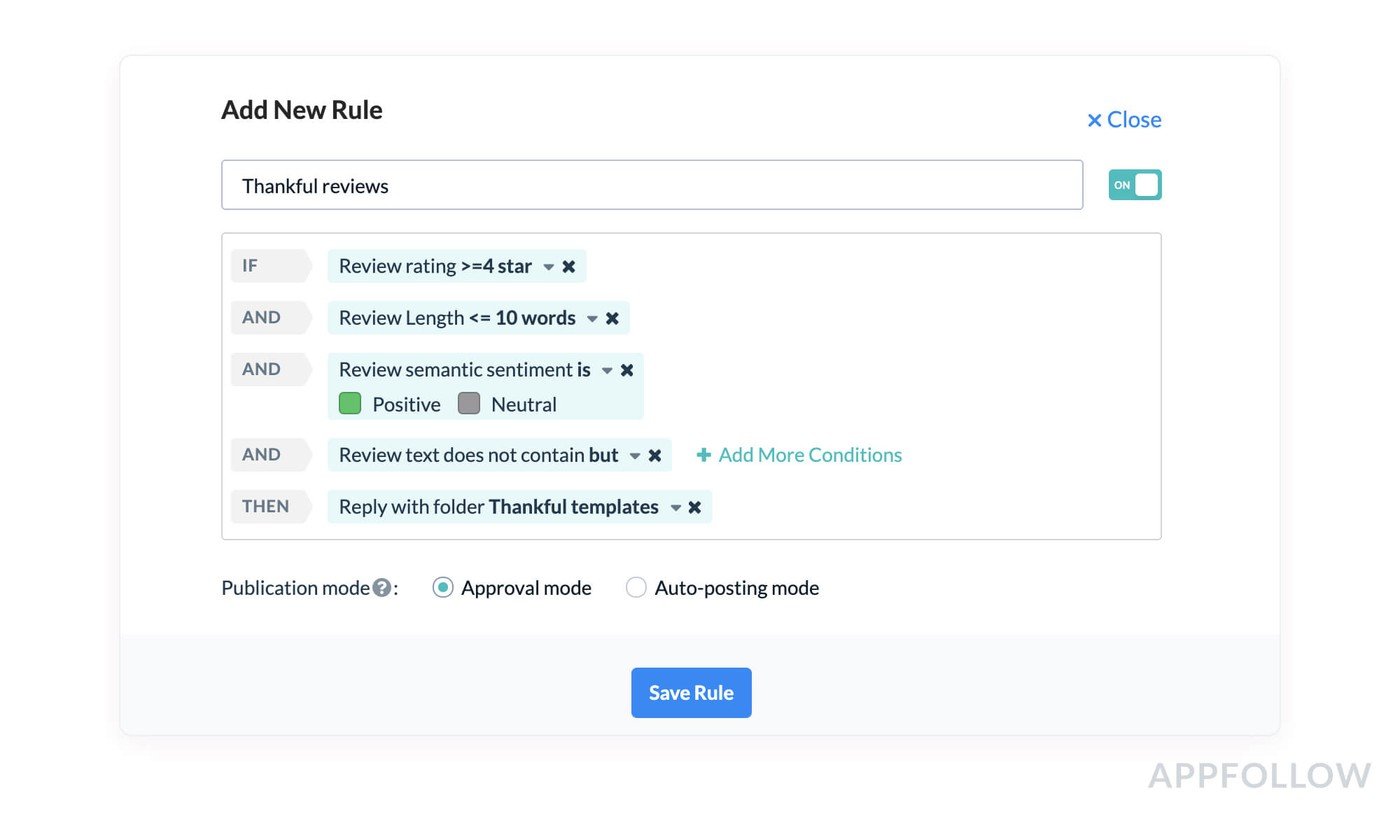

Above is one such example of how you could set up this type of auto-response. We recommend setting “exclusion” filters based on the length of the review and on review content containing the word “but” (example: “Your app is cool, but it would be great to add a feature that xyz...”).
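The same "thank you" rule can be sketched as a predicate. The function name and the 15-word cut-off are assumptions for illustration; the article does not prescribe a specific length.

```python
import re

THANK_YOU_MAX_WORDS = 15  # hypothetical cut-off; pick your own
BUT_PATTERN = re.compile(r"\bbut\b", re.IGNORECASE)


def qualifies_for_thank_you(rating: int, text: str) -> bool:
    """True if a positive review can get an automatic thank-you reply."""
    if rating < 4:
        return False  # only 4- and 5-star reviews
    if BUT_PATTERN.search(text):
        return False  # "but" usually introduces a request or complaint
    return len(text.split()) <= THANK_YOU_MAX_WORDS
```

The word-boundary match keeps words such as "butter" from triggering the exclusion, while "Your app is cool, but..." is correctly left for a human reply.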

Thank your users for the feedback - they will be pleased.

Part 5: Working with multilingual reviews

Lastly, let’s take a look at an important question for many app developers: how can you offer multilingual support without a native speaker in your team?

You have two strategies, depending on whether your team has the resources to translate response templates, or not.

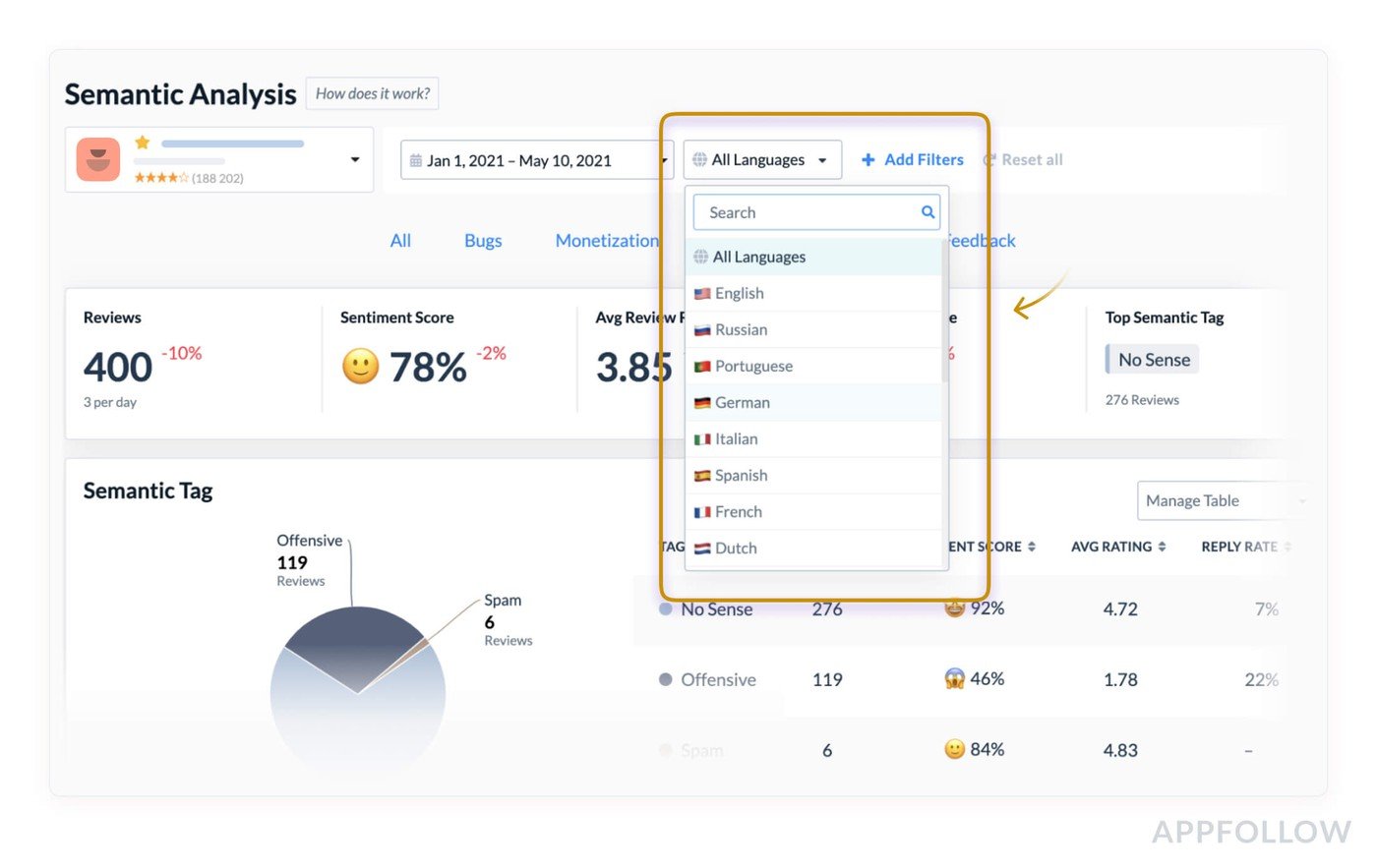

When managing multilingual reviews, you can follow many of the tactics described earlier in the piece, with one condition - you need to add a translation/language rule. Semantic Analysis in AppFollow already supports this feature, allowing you to filter semantics for all necessary languages and manage each chosen cohort separately.

You can add an additional “Review Translation” filter to ensure the rules for auto-tagging and auto-replies you have configured cover all languages (as in the screenshot below). With this setting, the AppFollow algorithm will automatically translate all reviews, and the reviews containing the specified requests in any language will fall under the configured rules (for example, the Arabic version of the word “facebook” - ”فيس بوك“).

If you have the resources to translate basic templates, you should add the automation rule under the criterion “Review Language is” and the corresponding folder with localized response templates. We recommend doing this particularly for regions where not as many users speak English, or where English responses are perceived badly, such as Asia, the Arabic regions, Spanish-, Portuguese-, and Russian-speaking countries. Implementing a multilingual review strategy and segmentation will pay dividends, and will further boost your metrics in these regions.
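The "Review Language is" idea boils down to a lookup with a fallback. In the sketch below, the template texts, the language codes and the `choose_template` helper are hypothetical; the second return value tells you whether a localized template was found or the default was used.

```python
# Hypothetical localized templates keyed by language code.
LOCALIZED_TEMPLATES = {
    "en": "Thank you for your feedback!",
    "es": "¡Gracias por tus comentarios!",
    "pt": "Obrigado pelo seu feedback!",
}
DEFAULT_LANGUAGE = "en"


def choose_template(review_language: str) -> tuple[str, bool]:
    """Return (template, is_localized) for a review language code."""
    code = review_language.lower().split("-")[0]  # "pt-BR" -> "pt"
    if code in LOCALIZED_TEMPLATES:
        return LOCALIZED_TEMPLATES[code], True
    # No localized folder for this language: fall back to the default.
    return LOCALIZED_TEMPLATES[DEFAULT_LANGUAGE], False
```

Tracking how often the fallback is used per language is a simple way to decide which templates are worth translating next.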

Automation is the new black

To summarize this Academy article, let’s look at the takeaways: why should you optimize review automation in the app stores?

Automation helps to:

- Reduce overall strain on your Support team

- Improve the effectiveness of your Support team

- Increase response speed

- Reduce the number of spammy reviews

- Gain product insights and implement them swiftly

We also have a detailed guide on setting up automation - be sure to give it a read here.

In the next article, we'll talk about automation in numbers. You will learn about the positive effect of automation, and which metrics and KPIs to use to track the process.