Managing Reviews / Lesson #2

‘Friday Update’: Working with app reviews during ticket traffic spikes

Intro

There’s no single right way to work with user feedback, and every brand has its own workflows and processes for review management. These usually depend on a host of factors, such as market vertical or product - and each brand will have its own criteria and rules that affect the tone of voice, response structure, and even the depth of technical information in the message body.

And why are app reviews (and replies) paramount to every brand? Think of it this way: the app stores are a public channel, and often your app page is the final touchpoint users will encounter before downloading your app. Your replies are visible to visitors who land on this page - not just to the original reviewer.

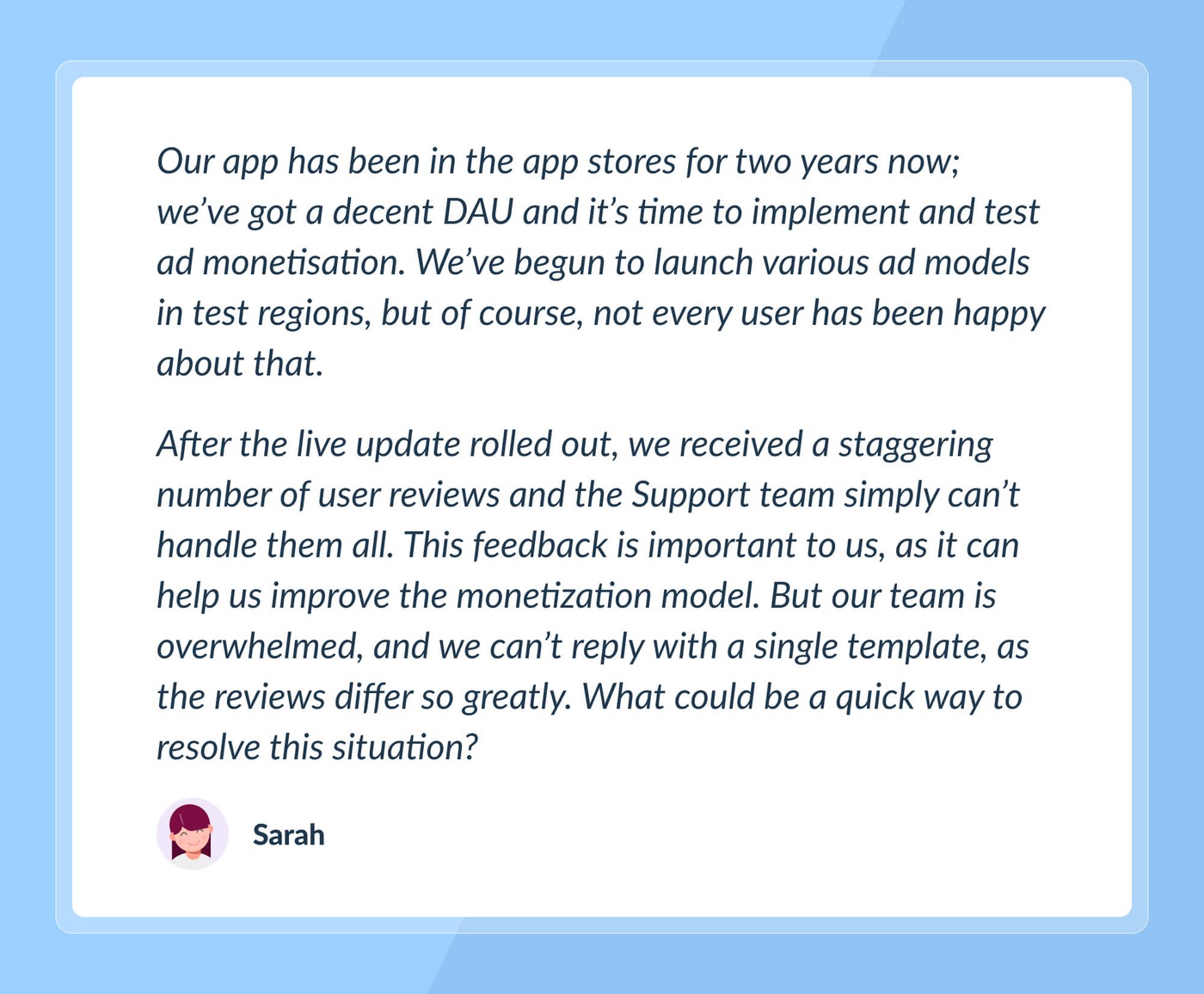

In this AppFollow Academy article, we’ll look at how to work with reviews during ticket traffic spikes or other high-stress situations, such as a bugged update release, a new paid app version release, or interface changes. To start, let’s look at two popular use cases, both of which pushed developers to begin working with reviews immediately.

First use case

These feedback spikes after an update rollout are a normal occurrence, but growing the size of your support team may not be the right answer here - in most cases, you can resolve the situation without investing in additional resources.

In the use case above, the app publisher is right not to send a single template reply to every review. Doing so can frustrate users further and increase the risk of losing loyal customers. These reviews are also a key source of useful information, often including feature requests, bug reports that could’ve been missed post-launch, or product improvement ideas (you can see more on performing insight research in the “Review semantic analysis” section of the Academy).

So, what can teams do when reviews are piling up but resources are limited?

Step 1: Determine the issue

The issue is straightforward in the use case above: users are frustrated by the publisher introducing in-app ads. However, the monetization model was implemented differently by region so that developers could test and identify the best monetization strategy.

First, developers must segment the data and analyze it to see if every region has the same reaction.

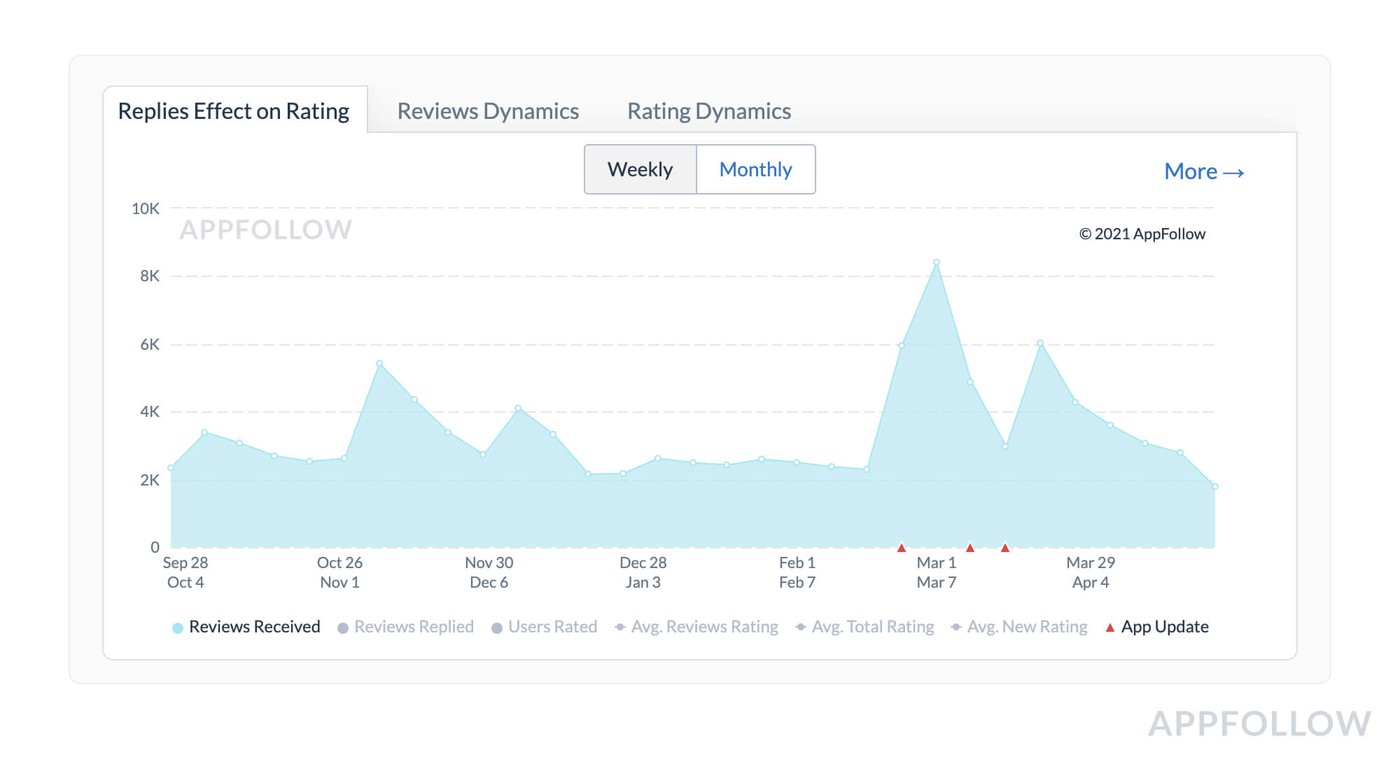

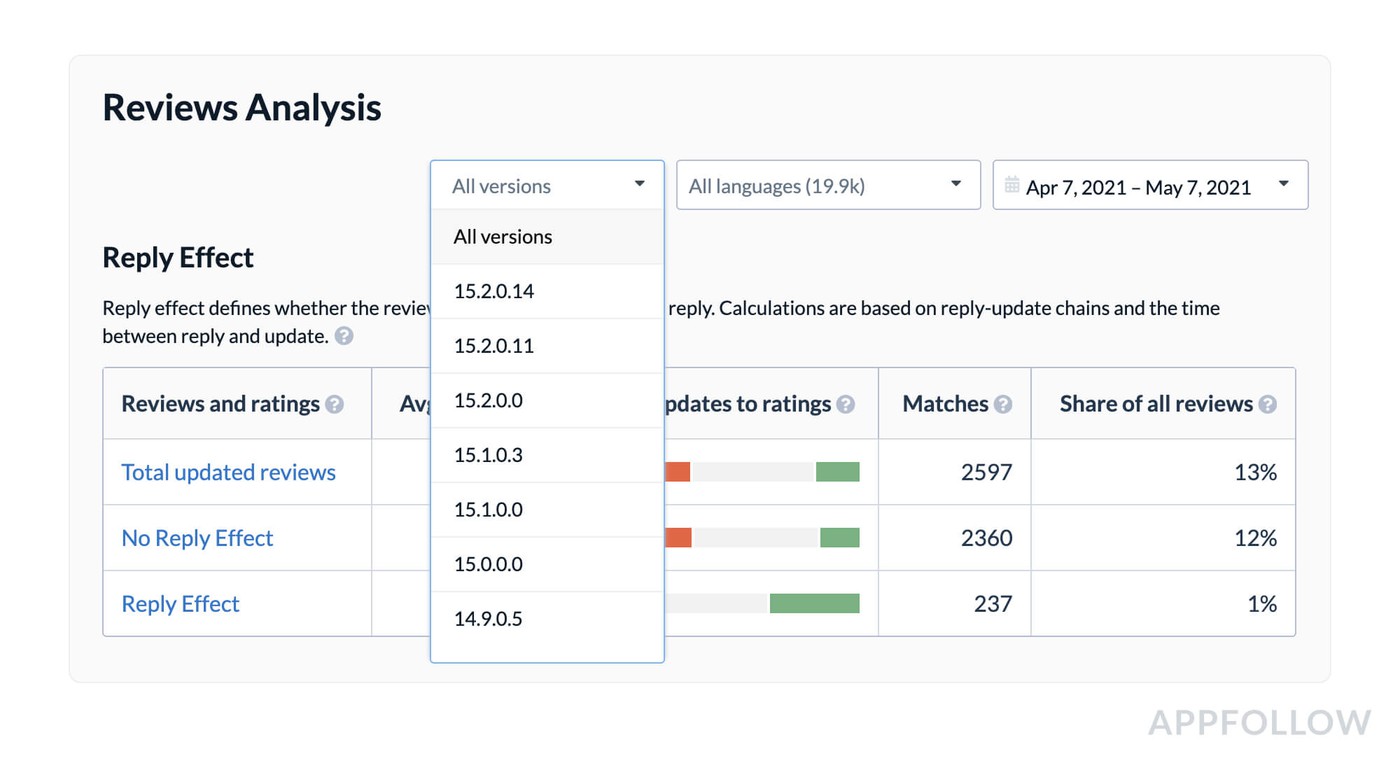

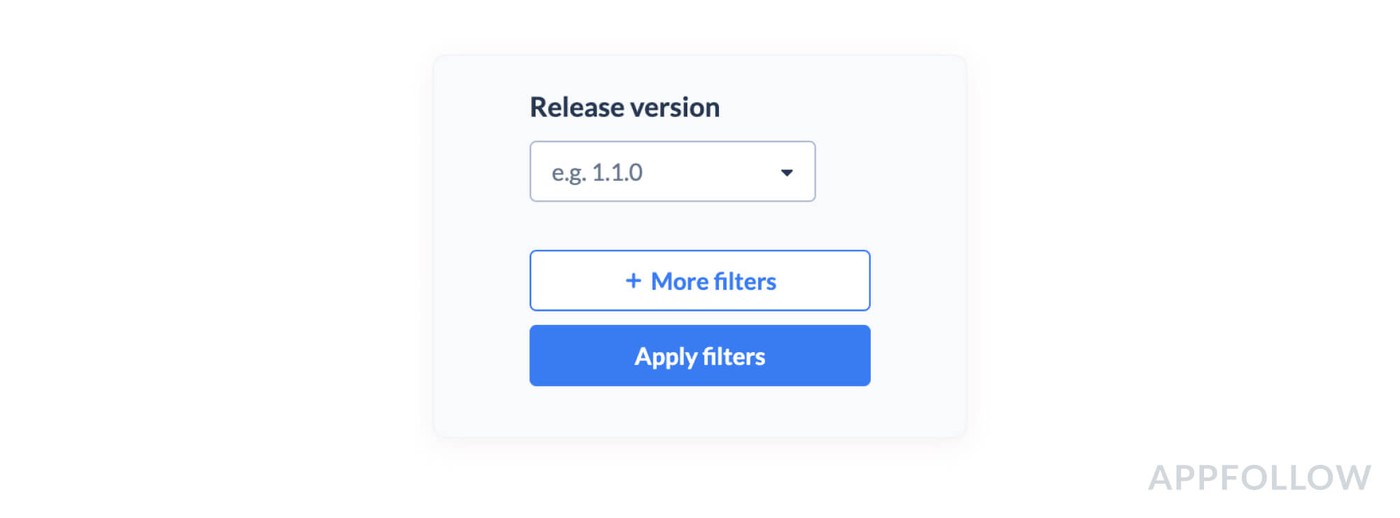

To do this on your AppFollow dashboard, navigate to the Review Analysis section, and then choose the product version that introduced these changes:

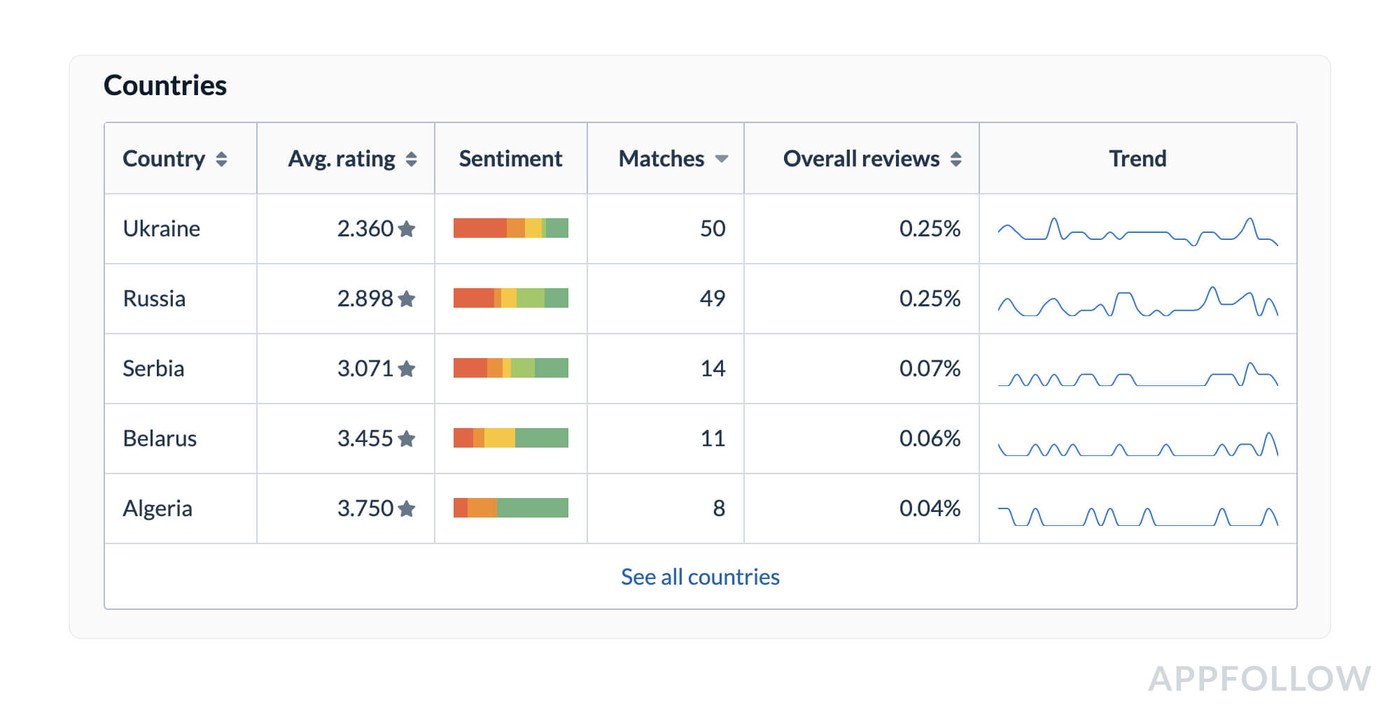

Then you can analyze statistics by country to see which regions reacted more negatively to these changes.

AppFollow also offers a more precise way to analyze dynamics in selected regions. On the Ratings Chart, highlighted in the screenshot below, you can filter by country and see the big picture of post-update ratings, not only reviews.

You can narrow this further to reviews that contain text. Ratings give you a bird's-eye view of the situation, while text reviews give you more tangible insights into what exactly is frustrating your users. It is also important to watch the dynamics of ratings as a whole, not only the reaction to the last update, as users in certain regions may be more likely than others to leave critical reviews.
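To make the last point concrete, here is a minimal Python sketch that compares each country's average rating before and after an update, so that a region that is always critical is not mistaken for one reacting to the change. It works on a plain list of dictionaries; the field names (`country`, `version`, `stars`), the function name, and the sample data are all assumptions made for illustration and do not reflect any AppFollow export format.

```python
from collections import defaultdict
from statistics import fmean


def rating_shift_by_country(ratings, new_version):
    """Return {country: (baseline_avg, post_update_avg, shift)}.

    Ratings left on `new_version` count as post-update; all others form the baseline.
    Countries missing from either group are skipped.
    """
    before = defaultdict(list)
    after = defaultdict(list)
    for rating in ratings:
        bucket = after if rating["version"] == new_version else before
        bucket[rating["country"]].append(rating["stars"])

    result = {}
    for country in before.keys() & after.keys():
        baseline = fmean(before[country])
        post = fmean(after[country])
        result[country] = (round(baseline, 2), round(post, 2), round(post - baseline, 2))
    return result


# Hypothetical sample data, invented for this example.
sample_ratings = [
    {"country": "US", "version": "2.0", "stars": 5},
    {"country": "US", "version": "2.0", "stars": 4},
    {"country": "US", "version": "2.1", "stars": 4},
    {"country": "US", "version": "2.1", "stars": 4},
    {"country": "DE", "version": "2.0", "stars": 3},
    {"country": "DE", "version": "2.0", "stars": 4},
    {"country": "DE", "version": "2.1", "stars": 1},
    {"country": "DE", "version": "2.1", "stars": 2},
]

shifts = rating_shift_by_country(sample_ratings, new_version="2.1")
most_affected = min(shifts, key=lambda country: shifts[country][2])
```

Sorting by the shift rather than by the raw post-update average is the design choice here: it highlights where the update changed sentiment, not where sentiment was already low.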

So far, we’ve learned that US users are more receptive to changes. What next?

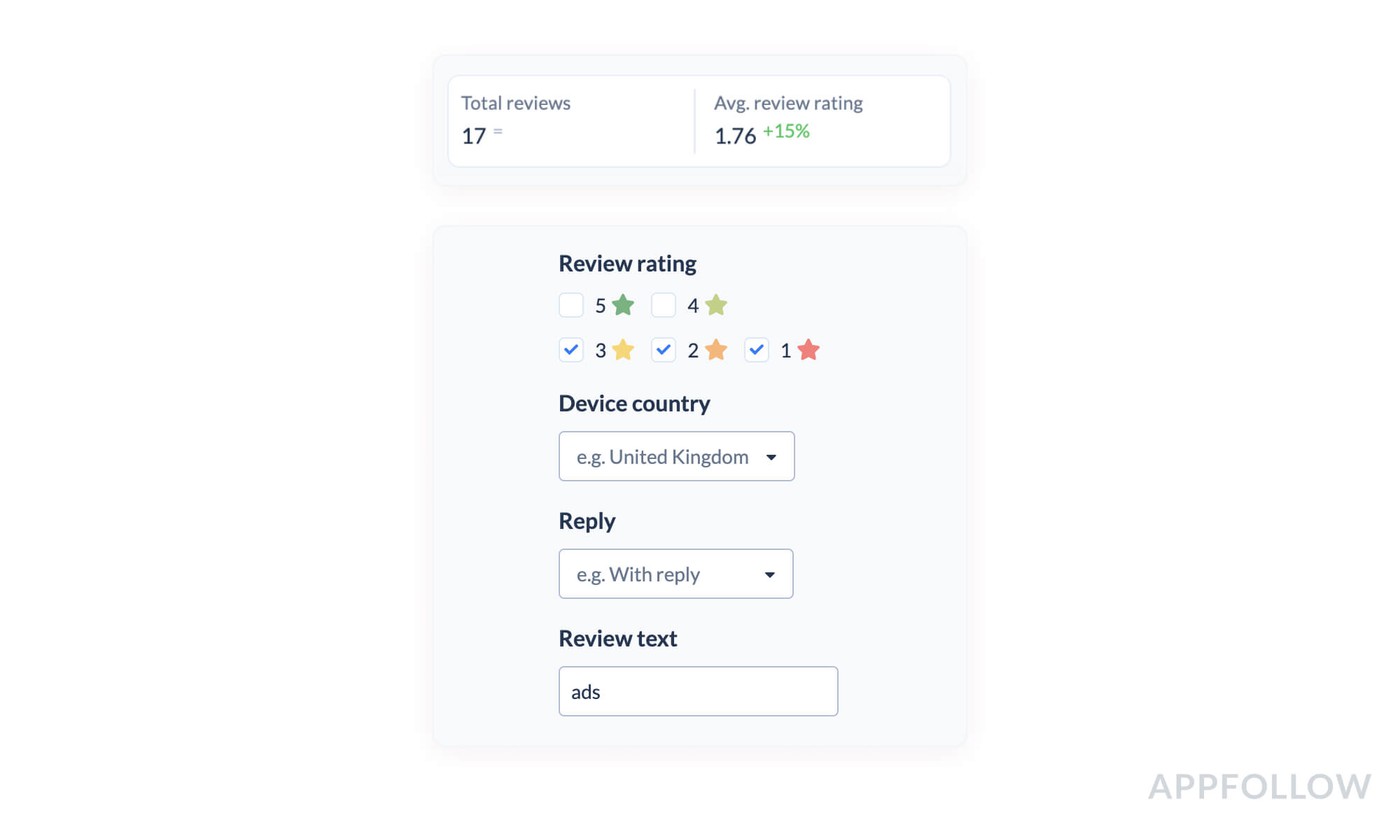

Once you’ve identified the critical regions - i.e., the ones with the highest percentage of 1-3 star reviews - we recommend performing a more detailed analysis of the contents of these reviews and bucketing them into separate topics. You can see the initial filter in the screenshot below.

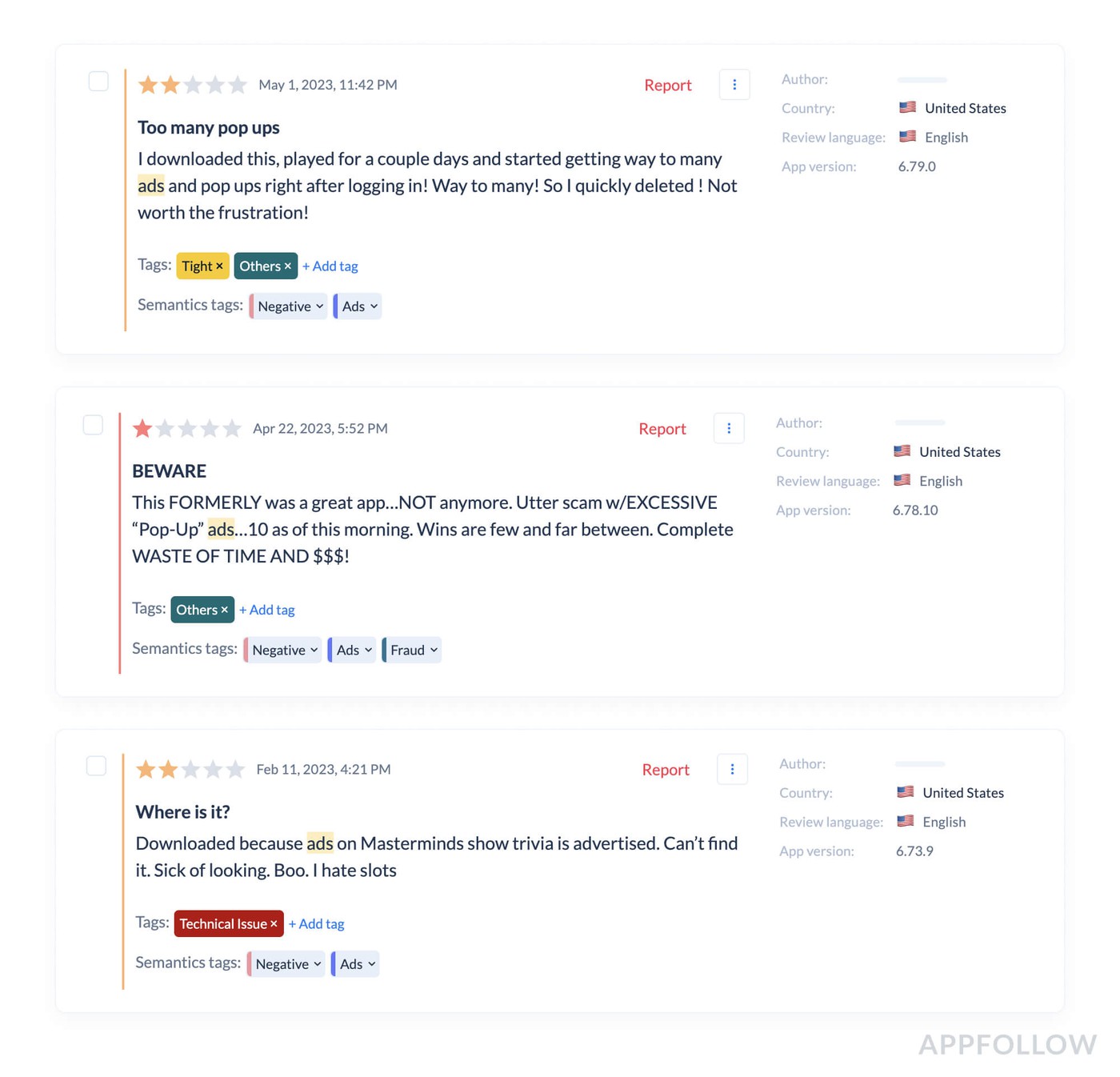

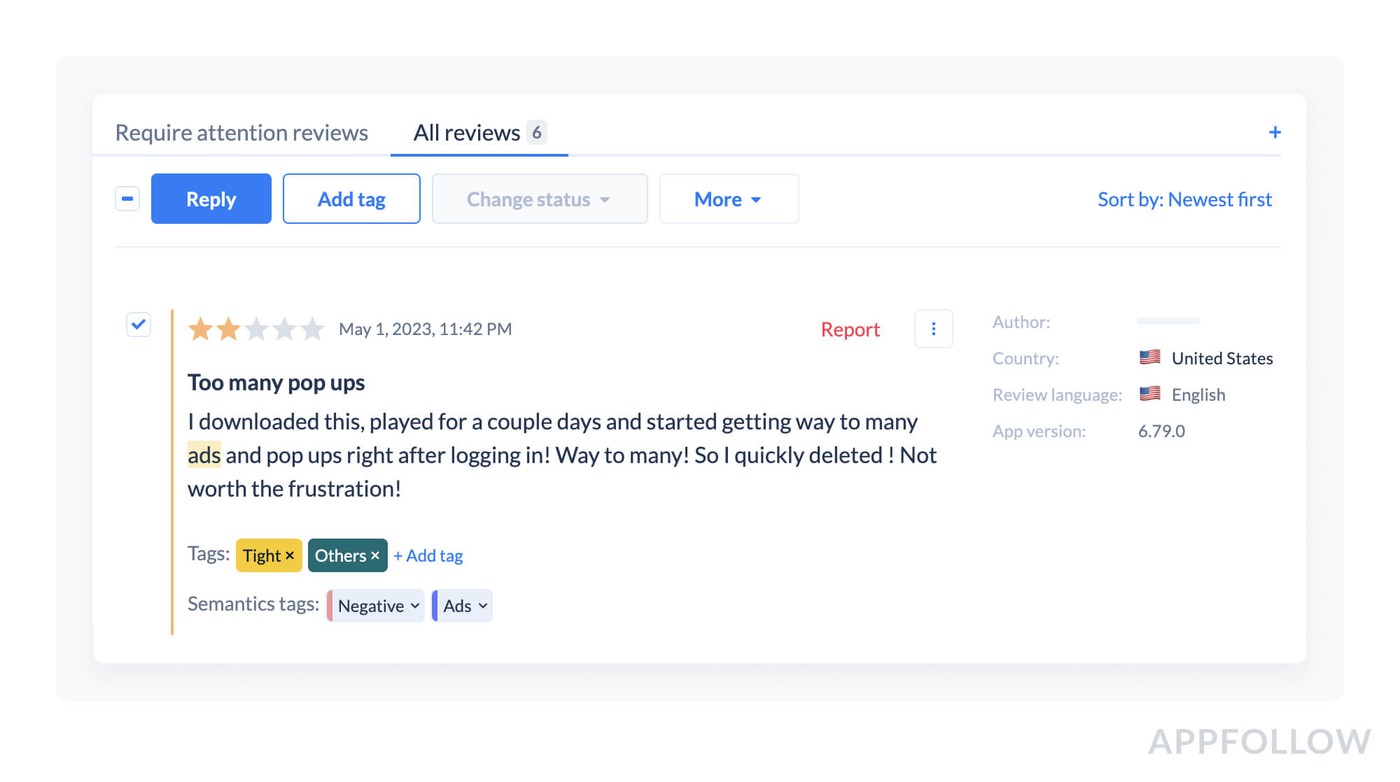
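If you work with exported review data outside the dashboard, the "critical region" check described above can be sketched in a few lines of Python. This is an illustration under stated assumptions: the review fields (`country`, `stars`), the function name, and the sample data are hypothetical, and the 1-3 star threshold simply mirrors the definition used in this lesson.

```python
from collections import Counter


def negative_share_by_country(reviews, max_negative_stars=3):
    """Rank countries by the share of reviews rated `max_negative_stars` or lower."""
    totals = Counter()
    negatives = Counter()
    for review in reviews:
        totals[review["country"]] += 1
        if review["stars"] <= max_negative_stars:
            negatives[review["country"]] += 1

    shares = {country: negatives[country] / totals[country] for country in totals}
    # Highest share of negative reviews first.
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)


# Hypothetical sample data, invented for this example.
sample_reviews = [
    {"country": "BR", "stars": 1},
    {"country": "BR", "stars": 2},
    {"country": "BR", "stars": 5},
    {"country": "US", "stars": 5},
    {"country": "US", "stars": 4},
    {"country": "US", "stars": 1},
    {"country": "US", "stars": 5},
    {"country": "DE", "stars": 1},
    {"country": "DE", "stars": 3},
]

ranked = negative_share_by_country(sample_reviews)
```

With very small review counts a share can be misleading, so in practice you may want to ignore countries below a minimum number of reviews before ranking.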

Briefly reviewing the feedback manually will allow you to segment different topics inside this big issue. Even in a use case as straightforward as monetization implementation, there will likely be a lot of nuances. Below, you can see various types of feedback that all fall under this wider umbrella:

These reviews cover three different types of user complaints, and all require a specific approach - a unified template response won’t work, and will only serve to further alienate users.

- Review #1 complains about ad frequency. A possible solution here would be to lower the ad frequency.

- Review #2 states that ads prevent the user from making progress in the app. A potential solution could be to test new placement formats that don’t block user progress (e.g., rewarded videos).

- Review #3 reports that the ad content is poor. In this case, it is important to raise the issue with your current ad mediation partner.

In all cases, the Support team will need to act as the first line of response - but it’s also essential that they relay the information back to the marketing and product teams, so that these teams can better understand user sentiment and potentially act on the feedback in future versions of the app.
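The manual bucketing described above can be approximated with a simple keyword match once you know which phrases signal each topic. The sketch below is a rough illustration, not a semantic analysis: the topic names follow the three review types in this lesson, while the keyword lists, field names (`id`, `text`), and sample reviews are assumptions you would replace with phrases from your own reviews.

```python
# Hypothetical keyword lists; tune them after reading a sample of real reviews.
TOPIC_KEYWORDS = {
    "ad_frequency": ("too many ads", "ads every", "constant ads"),
    "blocked_progress": ("can't continue", "stuck", "interrupts"),
    "ad_content": ("inappropriate", "scam", "misleading"),
}


def bucket_reviews(reviews):
    """Group review ids by topic; a review can land in more than one topic."""
    buckets = {topic: [] for topic in TOPIC_KEYWORDS}
    buckets["unsorted"] = []
    for review in reviews:
        text = review["text"].lower()
        matched = [
            topic
            for topic, keywords in TOPIC_KEYWORDS.items()
            if any(keyword in text for keyword in keywords)
        ]
        # Anything without a keyword hit is kept for manual review.
        for topic in matched or ["unsorted"]:
            buckets[topic].append(review["id"])
    return buckets


# Hypothetical sample reviews, invented for this example.
sample_reviews = [
    {"id": 1, "text": "Too many ads, one after every level"},
    {"id": 2, "text": "An ad interrupts the game and I get stuck on the loading screen"},
    {"id": 3, "text": "The ads are misleading and inappropriate for kids"},
    {"id": 4, "text": "Love the new design"},
]

buckets = bucket_reviews(sample_reviews)
```

Keeping an explicit "unsorted" bucket means reviews that match no keyword are not silently dropped, which matters when the topic list is still being refined.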

Now that the key issues have been determined, the marketing team can work on possible solutions - but how can Support teams proactively reply to user feedback?

Step 2: Face the problem directly

In the section above, we saw that users outlined three main areas of concern. And while each product has its own tone of voice when communicating with users, there are certain tips and tricks you can use to speed up the process of replying to users - specifically when it comes to the Support team’s heavily manual work.

Each concern, or user cohort, can be addressed separately using a review keyword filter and specifically designed templates. Each user cohort must get at least one template (although the more, the better) that directly addresses the review and utilizes the Support team’s damage control best practices.

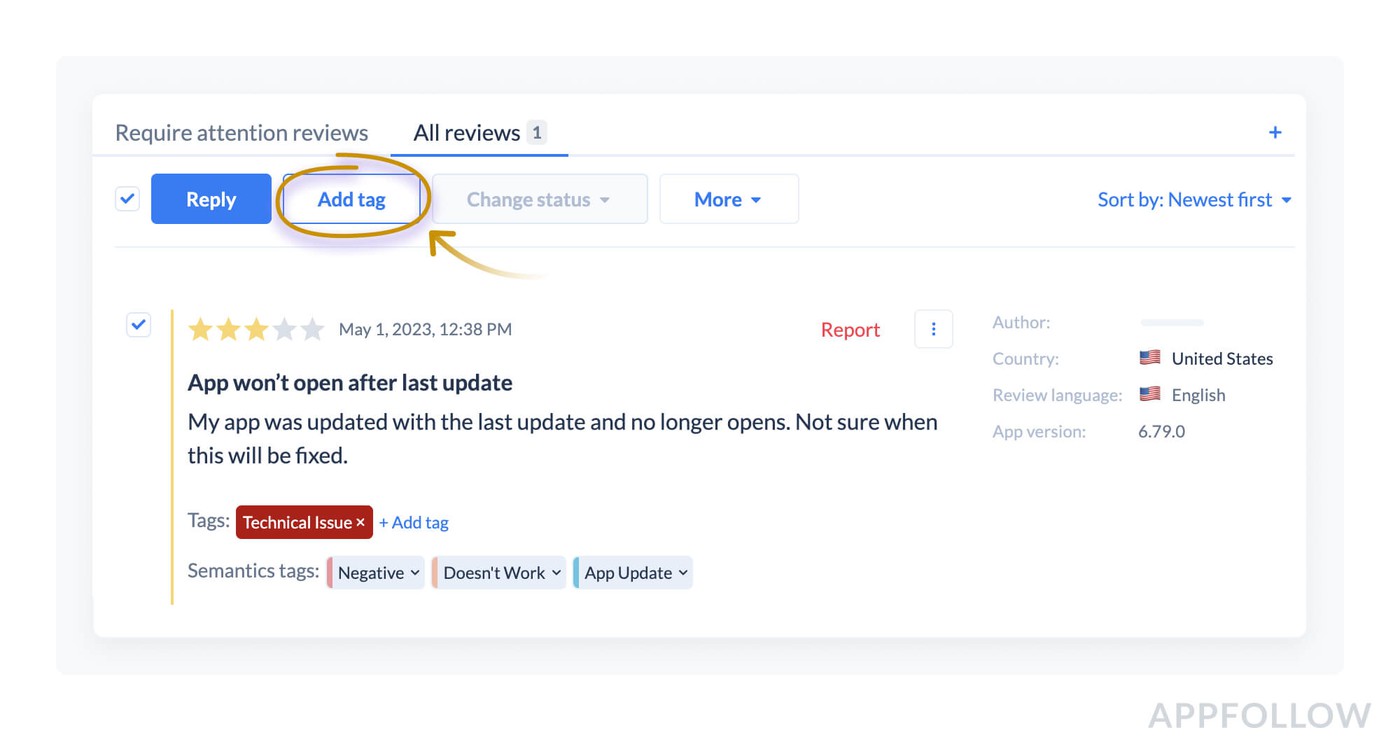

Here we follow the case above: following a big update with a new ad monetization policy inside the app, we’ve discovered 17 negative reviews with an average rating of 1.76, which all fall under Review type #1 above (i.e., complaints about ad frequency). You can group all of these together by using the review text keyword filter.

Next, let’s select this entire review cohort, using the Bulk Actions tool in AppFollow, to send out a template response to all selected users at the same time. In just a couple of clicks, you’ve been able to address each user with complaints about ad frequency - helping your Support team save a huge amount of time and resources.

Applying this approach to all selected review sub-topics (1-2-3) can save your Support team time and reduce stress.
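The logic behind the keyword filter plus bulk template reply can be sketched as follows. This example only drafts reply texts in memory and does not send anything or call any AppFollow API; the field names (`id`, `author`, `stars`, `text`), the template wording, and the sample reviews are assumptions for illustration.

```python
from string import Template

# Hypothetical template for the ad-frequency cohort; adapt it to your tone of voice.
REPLY_TEMPLATE = Template(
    "Hi $author, thank you for the feedback. We hear you on how often ads appear "
    "and have passed this on to our product team."
)


def draft_bulk_replies(reviews, keyword, max_stars=3):
    """Return {review_id: reply_text} for negative reviews whose text contains `keyword`."""
    keyword = keyword.lower()
    drafts = {}
    for review in reviews:
        if review["stars"] <= max_stars and keyword in review["text"].lower():
            drafts[review["id"]] = REPLY_TEMPLATE.substitute(author=review["author"])
    return drafts


# Hypothetical sample reviews, invented for this example.
sample_reviews = [
    {"id": 101, "author": "Ana", "stars": 1, "text": "Way too many ads now"},
    {"id": 102, "author": "Ben", "stars": 2, "text": "TOO MANY ADS after the update"},
    {"id": 103, "author": "Cy", "stars": 5, "text": "Not too many ads, great game"},
    {"id": 104, "author": "Di", "stars": 1, "text": "App crashes on launch"},
]

drafts = draft_bulk_replies(sample_reviews, keyword="too many ads")
```

Review 103 shows why the rating filter matters alongside the keyword: the phrase matches, but a five-star review should not receive a damage-control reply.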

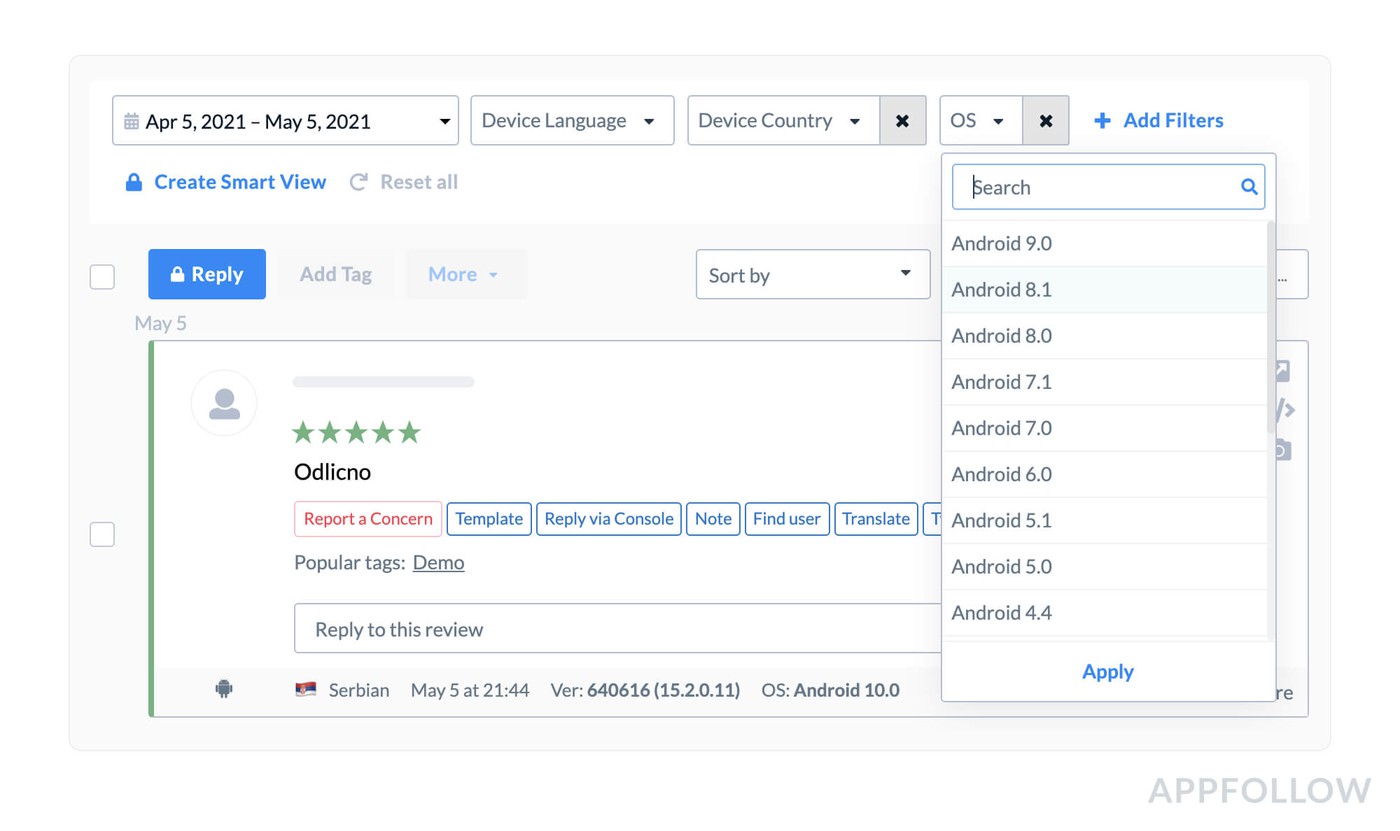

This tactic can be applied to each remaining review group by segmenting the reviews by language, country, and issue type. Setting up auto-replies with similar filters can safeguard you against similar situations in the future. We will cover how to do this in later parts of AppFollow Academy.

Now, let’s take a look at another use case:

Second use case

The first thing to bear in mind is to stay calm - every product out there has had this happen at least once in its history. Developers also have the advantage of knowing precisely what issue users are discussing. When every review describes the same issue, there is no need to spend time identifying it - developers can put that time, and their resources, into their response strategy instead.

The Support team’s review strategy will vary from case to case. If, for example, the issue is already being dealt with, you can try to neutralize negativity. Use the analysis tactic and filters explained above to narrow down which users to respond to. Start by determining the critical regions and languages and, as in the case above, filter reviews by the specific released version affected. This will speed up the segmentation process.

Once you’ve chosen the correct cohort and discussed the timeframe and other conditions for the hotfix with your Product Manager, you can begin to draft your response template. Based on our experience, users respond well to reasonably generic apology replies that promise a fix in the next app version, to be released soon.

Now that your template is ready to go, there’s one more thing to bear in mind before closing the issue. As negative reviews are coming in thick and fast, it’s important to provide a superior customer experience - and keep users posted as soon as the fix is deployed. A good solution here is to tag every related review with a specific name (for example, “Bug 01/05”) - this will help you come back to these reviews after the hotfix is live and let the reviewers know about it.

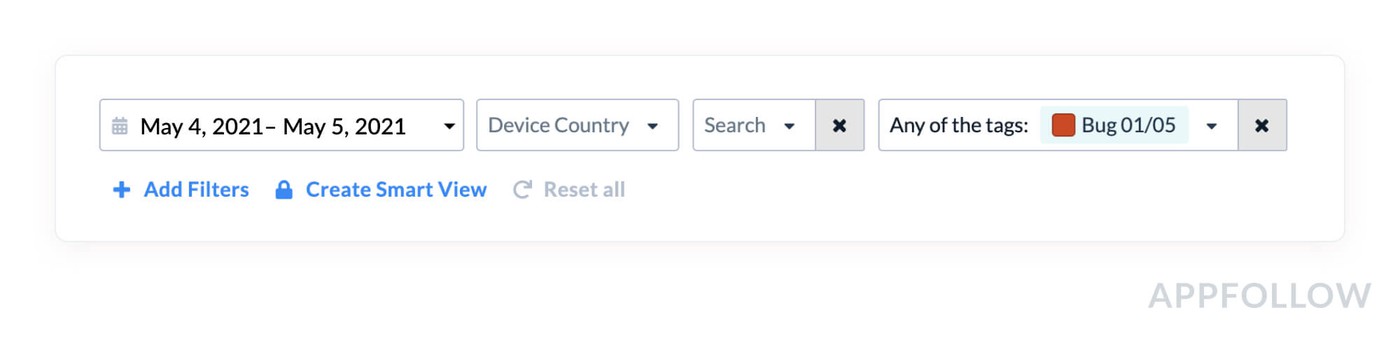

You can return to this user review cohort with the Tag filter:

Follow-up responses once a fix is live are standard practice in the app stores, and users will be notified via email that their review has received a new developer response. This also increases the likelihood of users updating their reviews and initial rating.
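The tag-then-follow-up workflow can be sketched in Python as below. It models tags as a set stored on each review and only drafts the follow-up texts; the field names (`id`, `version`, `stars`, `tags`), the version numbers, and the follow-up wording are hypothetical, and nothing here represents an AppFollow API call.

```python
# Hypothetical follow-up wording; adapt it to your tone of voice.
FOLLOW_UP = (
    "Hi again! The issue you reported is fixed in version {fixed_version}. "
    "Please update the app and let us know how it goes."
)


def tag_reviews(reviews, affected_version, tag, max_stars=3):
    """Tag negative reviews left on the affected version; return how many were tagged."""
    tagged = 0
    for review in reviews:
        if review["version"] == affected_version and review["stars"] <= max_stars:
            review.setdefault("tags", set()).add(tag)
            tagged += 1
    return tagged


def reviews_with_tag(reviews, tag):
    """Return the reviews carrying `tag`, i.e. the cohort to revisit after the hotfix."""
    return [review for review in reviews if tag in review.get("tags", set())]


# Hypothetical sample reviews, invented for this example.
sample_reviews = [
    {"id": 1, "version": "3.4.0", "stars": 1},
    {"id": 2, "version": "3.3.9", "stars": 2},
    {"id": 3, "version": "3.4.0", "stars": 2},
    {"id": 4, "version": "3.4.0", "stars": 5},
]

tagged_count = tag_reviews(sample_reviews, affected_version="3.4.0", tag="Bug 01/05")

# Later, once the hotfix is live, return to the tagged cohort and draft follow-ups.
cohort = reviews_with_tag(sample_reviews, "Bug 01/05")
follow_ups = {review["id"]: FOLLOW_UP.format(fixed_version="3.4.1") for review in cohort}
```

Tagging at the time of the first reply is what makes the later step cheap: the follow-up cohort is recovered from the tag alone, without re-running the original version and rating filters.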

We hope the use cases above have helped show how to cut your Support team’s workload during critical scenarios and how to gain valuable insight from these spikes in your user reviews. In the following articles, we’ll discuss how to work with a steady stream of reviews and set up automation via review tagging, auto-replies, and the foundations for complete automation of the first and second lines of the Support team.