How Kolibri Games used AppFollow's automation to reply to reviews and bring clarity to customer data

Response time reduced by 88%

Response rate increased by 75%

Reply effect: +0.72

"AppFollow automatically organizes actionable insights for us right away, with little time or resources on our side needed."

Lauren Wade, Head of Community Management at Kolibri Games

Kolibri Games has recently ventured into the cloud gaming space and continues to add titles for mobile. In 2020, Ubisoft acquired Kolibri Games, which has only helped it expand and grow faster as a publisher. With over 100 million downloads and 650k five-star ratings, it’s a strong leader in the ‘idle games’ genre.

01. Quickly organize insights and decipher patterns from customer data
02. Ensure that user feedback can be smoothly shared with relevant teams
03. Reply to repetitive reviews without wasting support agents’ time
04. Tackle the huge volume of incoming reviews with limited human resources

Kolibri Games’ portfolio is almost exclusively casual games, which are all very accessible, making them open to a diverse and global audience. As a result, they receive a huge volume of reviews from players for various reasons — especially for their flagship title.

A large share of these reviews were repetitive, offering little value or insight for the development teams. The only action they needed was a short response of acknowledgement.

But Kolibri Games didn't want to assign someone to spend part of every working day on a task that was rewarding and important but nevertheless menial.

As a data-driven company, Kolibri Games wanted to collect actionable player feedback. However, they didn't have the time or human resources to manually collect and organize customer data from the volume of reviews coming through. Without proper infrastructure or technology to help them do this task, actually collecting and sharing valuable feedback from players across the business was close to impossible.

Kolibri went looking for an automation solution to help them with their needs. They quickly decided that AppFollow was the best fit.

Firstly, they loved being able to manage both app stores from one interface, which simplified their support processes and kept them consistent across stores.

They could also delegate lots of simple support workflows to auto-replies — using bulk reply and templated automatic responses to simple positive feedback or repetitive reviews. This meant that their agents could now spend their time focused on tackling reviews with more complex or emotion-filled issues requiring a human touch.

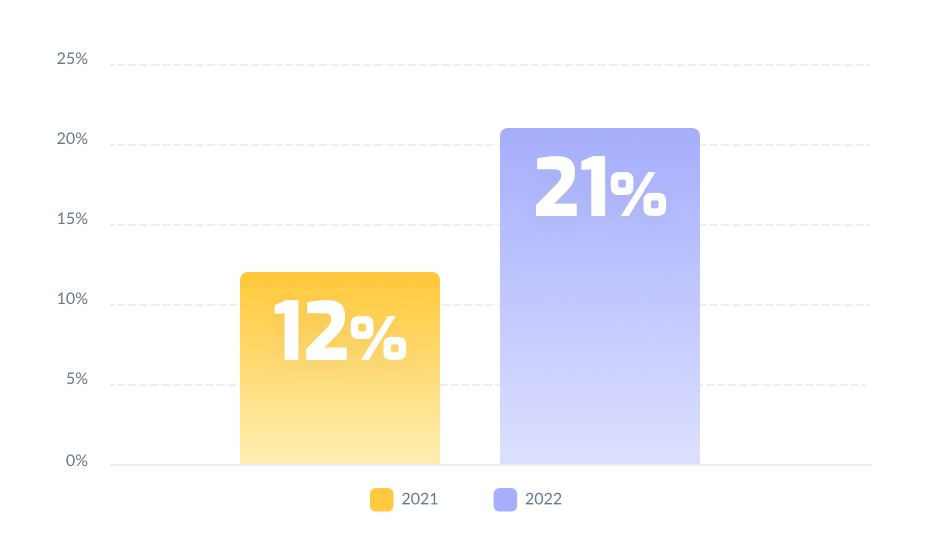

Average review response time for Idle Factory Tycoon

AppFollow’s auto-tags allowed Kolibri to categorize reviews based on topics or words. Once organized into categories, these reviews could be easily shared with other teams across the business based on the tag. Naturally, each team would be concerned about different topics based on their roles and responsibilities. In AppFollow, they could filter tags to find exactly what insights they were looking for.

The beauty of AppFollow’s review categorization was that Kolibri could customize the rules for each tag. They knew best what would be mentioned by their players about issues, pieces of content, or a mechanic that needed monitoring. Having this ability to create tags based on those words meant they could quickly extract those particular reviews for analysis as they came in.
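
To make the idea of keyword-based tag rules concrete, here is a minimal sketch in Python. It is not AppFollow's implementation or API: the `TagRule` class, the `tag_review` function, and the example tags and keywords are all hypothetical, chosen only to illustrate how a rule can map words in a review to a tag.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class TagRule:
    """A hypothetical tag rule: a tag name plus the keywords that trigger it."""
    tag: str
    keywords: tuple[str, ...]

    def matches(self, text: str) -> bool:
        # Match whole words or phrases only, ignoring case,
        # so the keyword "ad" does not fire on "added".
        return any(
            re.search(rf"\b{re.escape(kw)}\b", text, flags=re.IGNORECASE)
            for kw in self.keywords
        )


def tag_review(text: str, rules: list[TagRule]) -> list[str]:
    """Return every tag whose rule matches the review text, in rule order."""
    return [rule.tag for rule in rules if rule.matches(text)]


# Illustrative rules only; these tag names and keywords are made up.
RULES = [
    TagRule("crash", ("crash", "crashes", "freezes")),
    TagRule("ads", ("ad", "ads")),
    TagRule("praise", ("love", "great game")),
]

print(tag_review("Great game but it crashes after every ad", RULES))
# prints ['crash', 'ads', 'praise']
```

A single review can carry several tags, which is what lets different teams filter the same stream of reviews by the topics they care about.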

Kolibri Games no longer needs an agent to spend hours sifting through reviews and copying them into Google Sheets. Auto-tagging organizes reviews in real time as they arrive, turning a laborious, time-consuming job into an automatic one.

AppFollow automatically generates easy-to-interpret visual representations of the data. This means Kolibri Games doesn't need to spend time creating data visualizations from scratch: they are already there in AppFollow, ready to be analyzed.

As a result, the team has a much clearer overview of how the games are performing, and they can easily share these visuals in reports. This means there’s more alignment and shared knowledge across the business, as information silos just don't exist.

But organizing insights isn't the only use of auto-tags for Kolibri Games. They use them to group similar reviews together for the purpose of replying to them all at once, using bulk replies with response templates. Via tagging, Kolibri Games categorizes all issues that only require simple responses as lower-value issues and assigns them to automation.

The team can then focus all their efforts on the higher priority tag categories, which require the interpersonal skill of a human. This means the business effectively splits its resources while continuing to provide a consistent player experience.
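
The split between automated and human-handled reviews can be sketched as a simple routing step. This is an illustration under assumptions, not Kolibri's or AppFollow's actual workflow: the tag names, the template texts, and the `route_reviews` function are hypothetical.

```python
# Hypothetical low-value tags that only need a short acknowledgement,
# each mapped to an illustrative reply template.
AUTO_REPLY_TEMPLATES = {
    "praise": "Thanks for playing! We're glad you're enjoying the game.",
    "short_positive": "Thank you for the review!",
}


def route_reviews(tagged_reviews):
    """Split (review_id, tags) pairs into templated replies and a human queue."""
    auto_replies = {}  # review_id -> template text to send
    human_queue = []   # review_ids that need an agent
    for review_id, tags in tagged_reviews:
        # Automate only when every tag on the review is a low-value one;
        # untagged or mixed reviews go to a person.
        if tags and all(tag in AUTO_REPLY_TEMPLATES for tag in tags):
            auto_replies[review_id] = AUTO_REPLY_TEMPLATES[tags[0]]
        else:
            human_queue.append(review_id)
    return auto_replies, human_queue


auto, humans = route_reviews([
    ("r1", ["praise"]),
    ("r2", ["praise", "crash"]),
    ("r3", []),
])
print(auto)    # {'r1': "Thanks for playing! We're glad you're enjoying the game."}
print(humans)  # ['r2', 'r3']
```

Sending anything untagged or mixed to the human queue is a conservative default in this sketch: automation handles only the reviews it was explicitly set up for.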

Before adopting auto-replies, Lauren Wade, Head of Community Management at Kolibri Games, was somewhat hesitant about automation. She worried that it would take away the human touch of support, lower the quality of help provided, and detract from the player experience.

When we spoke with Lauren, she said that what players expect from brands has changed, especially since the pandemic. They want their experience with games and apps to be flawless, and they expect issues and queries to be dealt with quickly and conveniently.

So, with the volume and pace of incoming reviews increasing each quarter, Kolibri also faced higher player expectations for convenience. This made the case for adopting automation even stronger.

Lauren realized that, whatever her initial hesitations about automation, rejecting it outright and ignoring its potential benefits made little sense. The team soon learned that protecting support quality came down to understanding where automation worked best and where it didn't.

A big part of finding value in automation was properly delegating appropriate tasks. The quality of the responses from automation depended on how rules and triggers were created, and according to what conditions.

Kolibri Games stress-tested auto-replies over the Christmas and Easter holidays, when fewer staff would be available and more players would be active in the games.

So they set auto-replies to deal with 100% of reviews that came in over these periods to see how it would manage and what would happen to their average rating, sentiment score, and CSAT.

The result?

Barely a handful of players seemed to notice that automation was responding to their reviews. After drilling into the results, Lauren found that a total of four reviews mentioned not liking being handled by automation. That is an inconsequential number of negatively affected reviews given that Kolibri receives about 900 new reviews a day.
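
For a rough sense of scale, the arithmetic can be checked in a few lines of Python. The case study does not state how many days the test ran, so this sketch assumes the most pessimistic comparison: all four complaints measured against a single day's volume of about 900 reviews.

```python
complaints = 4         # reviews that objected to automation (from the case study)
reviews_per_day = 900  # approximate daily review volume (from the case study)

# Assumption: compare against one day's volume, the smallest plausible denominator.
# Over a multi-day test period the true share would be smaller still.
worst_case_share = complaints / reviews_per_day
print(f"{worst_case_share:.2%}")  # prints 0.44%
```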

So pretty much no players were negatively impacted by this new automation strategy. In fact, within a month of the experiment, the Sentiment Score — the correlation between positive and negative reviews — increased by 4%, and the average rating was stable.

Lauren discovered that during the March experiment, reviews that received a reply via automation had an average reply effect of +0.2, versus -0.5 for reviews left without a response in that same period.

Reply effect*


*Reply effect: an algorithm that creates a key metric based on whether the review was updated after the developer's reply - positively or negatively - and how much time passed between the reply and the update.

This demonstrates that providing a response via automation can create a positive effect, whereas leaving a review without a response can often negatively affect its rating. The value of this experiment for Kolibri was that they now felt more confident that automation could be relied on when they had fewer human resources or during an influx of player reviews.